Machine learning is the process of teaching machines to learn from data. Deep learning does the same but builds the model out of artificial neurons. These neurons are arranged in multiple layers, and the machine runs many training iterations to fit the model. The user trains the model on a dataset that contains target values, so the model can learn the hidden patterns in them.

Table of Contents

This guide explains the following sections:

- What is a Loss Function

- What is Binary Cross Entropy (BCE) Loss

- How to Get BCE Loss in PyTorch

- Prerequisites

- Example 1: Get BCE Loss

- Example 2: Get Functional BCE Loss

- Example 3: Get BCE With Logits Loss

- Example 4: Using pos_weight in BCE With Logits Loss

- Conclusion

What is a Loss Function

Artificial intelligence models are trained on target values taken from historical data, and the model has to produce predictions for new or future events. A loss function then calculates the difference between the given target values and the predicted ones. Minimizing this loss value lets the user optimize the model so that it makes better predictions in the next iteration.
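The following minimal sketch makes the idea of minimizing the loss concrete with a training loop on a tiny dataset. The data, the single-layer model, the SGD optimizer, and the learning rate of 0.5 are all assumptions for illustration. The loop uses BCEWithLogitsLoss, which is covered later in this guide, and records the loss at every step so you can see it shrink:

```python
import torch

# Hypothetical toy data: one feature per row and a binary target (assumption)
x = torch.tensor([[-2.0], [-1.0], [1.0], [2.0]])
y = torch.tensor([[0.0], [0.0], [1.0], [1.0]])

torch.manual_seed(0)                       # reproducible initial weights
model = torch.nn.Linear(1, 1)              # outputs raw scores (logits)
loss_fn = torch.nn.BCEWithLogitsLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.5)

losses = []
for _ in range(50):
    optimizer.zero_grad()                  # clear gradients from the previous step
    loss = loss_fn(model(x), y)            # compare predictions with the targets
    loss.backward()                        # gradients of the loss w.r.t. the weights
    optimizer.step()                       # update the weights to lower the loss
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

Each iteration follows the same pattern: compute the loss, call backward() to get the gradients, and let the optimizer move the weights in the direction that lowers the loss.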

What is Binary Cross Entropy (BCE) Loss

For each row of the dataset, the model predicts the probability that the row belongs to the positive class (class 1). BCE then uses the concept of a corrected probability: the probability the model assigns to the row's actual class. This is the predicted probability for rows labeled 1 and one minus the predicted probability for rows labeled 0. Once the corrected probabilities are calculated, the loss function takes the logarithm (log) of each one. Finally, BCE averages these log values over the complete dataset and negates the result. As a result, confident correct predictions give a loss close to 0, and confident wrong predictions give a large loss.

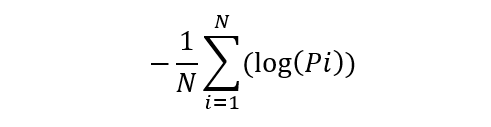
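The steps above can be sketched in a few lines of PyTorch. The predicted probabilities and labels below are made-up values. The sketch computes the corrected probabilities by hand, takes the negative mean of their logs, and compares the result with PyTorch's built-in torch.nn.functional.binary_cross_entropy():

```python
import torch
import torch.nn.functional as F

# Made-up predicted probabilities of class 1 and true labels (0 or 1)
pred = torch.tensor([0.9, 0.2, 0.6, 0.1])
label = torch.tensor([1.0, 0.0, 0.0, 1.0])

# Corrected probability: the probability assigned to each row's actual class
corrected = torch.where(label == 1, pred, 1 - pred)

# Negative mean of the log of the corrected probabilities
manual_bce = -torch.log(corrected).mean()

# PyTorch's built-in BCE on the same values
builtin_bce = F.binary_cross_entropy(pred, label)

print(corrected)
print(manual_bce.item(), builtin_bce.item())
```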

The mathematical representation of the Binary Cross Entropy loss function is as follows:

N: Total number of rows

Pi: The corrected probability
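Written out with the symbols above, the loss is the negative mean of the log of the corrected probabilities. The symbols ŷ_i (the predicted probability of class 1 for row i) and y_i ∈ {0, 1} (its true label) are introduced here for illustration. Substituting the definition of the corrected probability gives the familiar two-term form:

$$
\text{BCE} = -\frac{1}{N}\sum_{i=1}^{N}\log(p_i),
\qquad
p_i =
\begin{cases}
\hat{y}_i & \text{if } y_i = 1\\
1-\hat{y}_i & \text{if } y_i = 0
\end{cases}
$$

$$
\text{BCE} = -\frac{1}{N}\sum_{i=1}^{N}\Big[\,y_i\log(\hat{y}_i) + (1-y_i)\log(1-\hat{y}_i)\Big]
$$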

How to Get BCE Loss in PyTorch

To get the BCE loss value in a PyTorch model, first complete the requirements described in the following section. After that, the guide implements the BCE functions in PyTorch with the help of multiple examples.

The Python code for the examples used in this guide can be accessed from here:

Prerequisites

This section explains the requirements for using the Binary Cross Entropy (BCE) loss functions:

- Access Python Notebook

- Install Modules

- Import Libraries

Access Python Notebook

Open a Python notebook by clicking the “New Notebook” button on the official website:

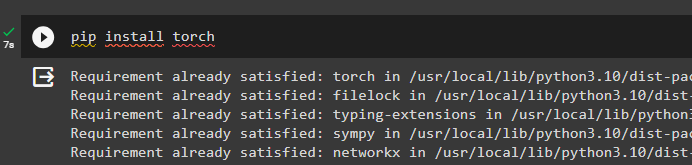

Install Modules

In the notebook, install the torch library using the pip command:

pip install torch

Import Libraries

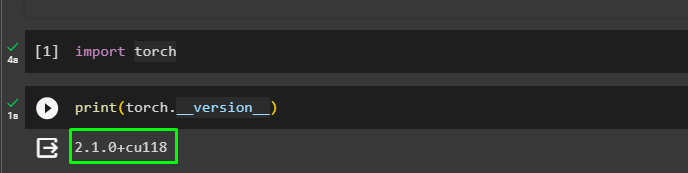

Import the torch library to use its functions for getting the BCE loss in PyTorch:

import torch

Check the installed version of torch by executing the following code in the Python notebook:

print(torch.__version__)

Example 1: Get BCE Loss

The first example computes the BCE loss using the BCELoss() class from the torch library. Its syntax is as follows:

Syntax

The torch library provides the BCELoss() class with the following arguments:

torch.nn.BCELoss(weight=None, size_average=None, reduce=None, reduction='mean')

Arguments:

- weight: an optional tensor that manually rescales the loss of each batch element.

- size_average: an optional boolean (deprecated; use reduction instead). By default, the losses are averaged over each loss element in the batch; if it is False, they are summed instead.

- reduce: an optional boolean (deprecated; use reduction instead). By default, the losses are averaged or summed for each mini-batch depending on size_average; if it is False, one loss per batch element is returned and size_average is ignored.

- reduction: a string that specifies the reduction applied to the output: 'none', 'mean' (the default), or 'sum'.
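The following sketch shows how the reduction and weight arguments change the result, using made-up probabilities, labels, and weights. It leaves out size_average and reduce because they are deprecated in favor of reduction:

```python
import torch

probs = torch.tensor([0.9, 0.3, 0.6])   # predicted probabilities (after a sigmoid)
target = torch.tensor([1.0, 0.0, 1.0])  # true labels

# reduction='none' keeps one loss value per element
per_element = torch.nn.BCELoss(reduction='none')(probs, target)
# reduction='sum' and reduction='mean' collapse them into a single number
total = torch.nn.BCELoss(reduction='sum')(probs, target)
average = torch.nn.BCELoss(reduction='mean')(probs, target)

# weight rescales each element's loss before any reduction is applied
w = torch.tensor([1.0, 2.0, 0.5])
weighted = torch.nn.BCELoss(weight=w, reduction='none')(probs, target)

print(per_element)
print(total, average)
print(weighted)
```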

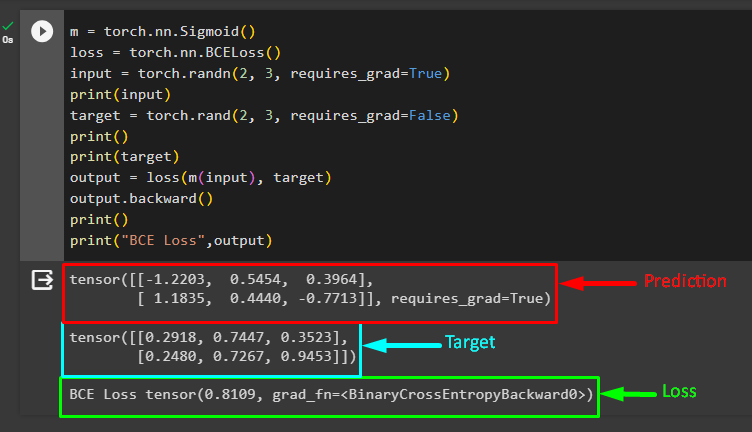

The following example uses the BCELoss() class to get the loss between the sigmoid of the input values and the target probabilities:

m = torch.nn.Sigmoid()

loss = torch.nn.BCELoss()

input = torch.randn(2, 3, requires_grad=True)

print(input)

target = torch.rand(2, 3, requires_grad=False)

print()

print(target)

output = loss(m(input), target)

output.backward()

print()

print("BCE Loss", output)

The above code works as follows:

- The sigmoid function is an S-shaped curve that maps any real number into the range from 0 to 1, so its outputs can be read as probabilities.

- Create a Sigmoid module that maps values into the range from 0 to 1 and store it in the m variable.

- Create a BCELoss() object and store it in the loss variable so it can be called later in the example.

- Create an input tensor that requires gradient computation and a target tensor that does not. Both tensors must have the same shape so they can be compared to compute the loss value.

- Call the loss function with the sigmoid of the input and the target to get the value representing the loss.

- Apply backpropagation using the backward() method to compute the gradient of the loss with respect to the input tensor:

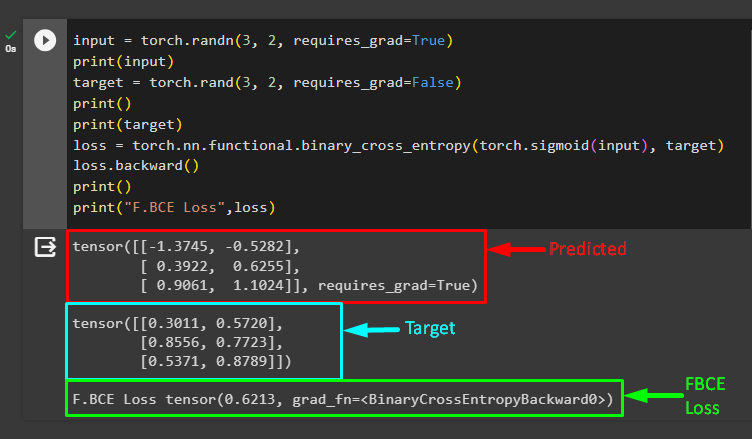
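The following sketch checks what backward() actually computes. It repeats the example with a fixed random seed, added here as an assumption for reproducibility. It then compares input.grad with the closed-form gradient of a sigmoid followed by mean-reduced BCE, which is (sigmoid(x) - y) / N for N elements:

```python
import torch

torch.manual_seed(0)
m = torch.nn.Sigmoid()
loss = torch.nn.BCELoss()            # default reduction='mean'

input = torch.randn(2, 3, requires_grad=True)
target = torch.rand(2, 3)            # requires_grad is False by default

output = loss(m(input), target)
output.backward()

# For sigmoid followed by mean-reduced BCE, the gradient with respect to each
# input element simplifies to (sigmoid(x) - y) / N, where N is the element count
expected_grad = (torch.sigmoid(input) - target) / input.numel()

print(input.grad)
print(expected_grad.detach())
```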

Example 2: Get Functional BCE Loss

Create two 3×2 tensors, one for the input and one for the target values. Call the torch.nn.functional.binary_cross_entropy() function with the sigmoid of the input and the target values to calculate the loss value. Then apply backpropagation to compute the gradients that are used to minimize the loss in the next iterations:

input = torch.randn(3, 2, requires_grad=True)

print(input)

target = torch.rand(3, 2, requires_grad=False)

print()

print(target)

loss = torch.nn.functional.binary_cross_entropy(torch.sigmoid(input), target)

loss.backward()

print()

print("BCE Loss",loss)

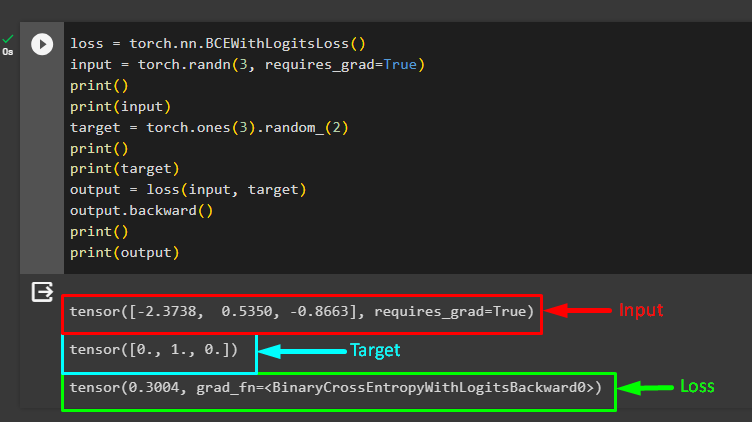
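The functional call and the BCELoss module compute the same quantity. The module simply stores options such as reduction for later calls. The following minimal sketch compares the two on the same made-up tensors. The seed is an assumption added for reproducibility:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
input = torch.randn(3, 2)
target = torch.rand(3, 2)
probs = torch.sigmoid(input)

# Functional form: a plain function call, no object to create
functional_loss = F.binary_cross_entropy(probs, target)
# The same function with reduction='sum' instead of the default 'mean'
functional_sum = F.binary_cross_entropy(probs, target, reduction='sum')
# Module form: create a BCELoss object, then call it
module_loss = torch.nn.BCELoss()(probs, target)

print(functional_loss.item(), module_loss.item(), functional_sum.item())
```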

Example 3: Get BCE With Logits Loss

Create a BCEWithLogitsLoss() object and store it in the loss variable. Unlike BCELoss(), this class applies the sigmoid internally, so the raw input values (logits) are passed to it directly. Create an input tensor with 3 random values and a target tensor of 3 values, each randomly set to 0 or 1 by random_(2), and print both. Then call loss with the input and target tensors to compute the loss, and apply backpropagation:

loss = torch.nn.BCEWithLogitsLoss()

input = torch.randn(3, requires_grad=True)

print()

print(input)

target = torch.ones(3).random_(2)

print()

print(target)

output = loss(input, target)

output.backward()

print()

print(output)

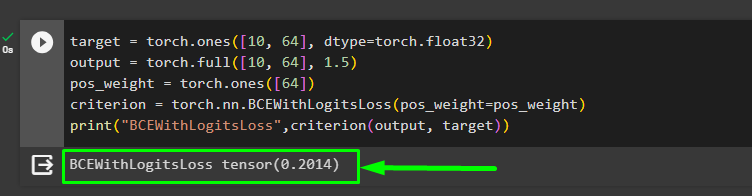
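The following sketch shows why BCEWithLogitsLoss is usually preferred over a separate sigmoid and BCELoss. For moderate logits, the two agree. For a very large logit, however, the sigmoid output rounds to exactly 1.0 in float32. BCELoss then clamps its log outputs at -100 (as documented for BCELoss), so it underreports the loss. The logit value of 200 is an arbitrary choice for illustration:

```python
import torch

torch.manual_seed(0)
logits = torch.randn(3)
target = torch.ones(3).random_(2)

# BCEWithLogitsLoss applies the sigmoid internally...
with_logits = torch.nn.BCEWithLogitsLoss()(logits, target)
# ...so it matches BCELoss applied to sigmoid outputs for moderate logits
sigmoid_then_bce = torch.nn.BCELoss()(torch.sigmoid(logits), target)

# For a very large logit, sigmoid rounds to exactly 1.0 in float32 and
# BCELoss clamps log(0) to -100, so the true loss of 200 is reported as 100
big = torch.tensor([200.0])
zero = torch.tensor([0.0])
clamped = torch.nn.BCELoss()(torch.sigmoid(big), zero)
stable = torch.nn.BCEWithLogitsLoss()(big, zero)

print(with_logits.item(), sigmoid_then_bce.item())
print(clamped.item(), stable.item())
```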

Example 4: Using pos_weight in BCE With Logits Loss

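The BCEWithLogitsLoss() class combines a sigmoid layer and the BCE loss in one class for binary classification problems. The following example also passes the pos_weight argument, which sets a weight for the positive examples of each class, in a multi-label setting with 64 classes: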

target = torch.ones([10, 64], dtype=torch.float32)

output = torch.full([10, 64], 1.5)

pos_weight = torch.ones([64])

criterion = torch.nn.BCEWithLogitsLoss(pos_weight=pos_weight)

print("BCEWithLogitsLoss", criterion(output, target))

Here:

- Create a 2D target tensor that contains only ones (a batch of 10 samples with 64 classes) and a 2D output tensor of raw predictions (logits) filled with 1.5.

- The pos_weight variable contains a 1D tensor of 64 ones, one weight per class for the positive examples. Since every weight equals 1, the loss is the same as without pos_weight.

- The criterion variable stores the loss function created with the pos_weight argument.

- Display the loss value by calling criterion() with the output and target tensors.
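The following sketch shows what pos_weight changes. It reuses the tensors from the example and compares the all-ones weights with a hypothetical weight of 3 per class. The PyTorch documentation suggests setting pos_weight to the ratio of negative to positive examples for a class (for example, 300 negatives and 100 positives give 3). Because every target here is 1, each element's loss is pos_weight × log(1 + e^(-1.5)):

```python
import math
import torch

target = torch.ones([10, 64], dtype=torch.float32)  # 10 samples, 64 classes, all positive
output = torch.full([10, 64], 1.5)                   # the same raw logit everywhere

# All weights equal to 1: identical to plain BCEWithLogitsLoss
base = torch.nn.BCEWithLogitsLoss(pos_weight=torch.ones([64]))(output, target)

# Hypothetical weight of 3 for every class (e.g. 300 negatives / 100 positives)
weighted = torch.nn.BCEWithLogitsLoss(pos_weight=torch.full([64], 3.0))(output, target)

print(base.item(), math.log1p(math.exp(-1.5)))
print(weighted.item())
```

Values greater than 1 increase the penalty for missed positives, which favors recall. Values below 1 reduce that penalty, which favors precision.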

That’s all about the process of getting the binary cross-entropy loss in PyTorch.

Conclusion

To get the BCE loss in PyTorch, import the torch library and use BCELoss(), torch.nn.functional.binary_cross_entropy(), or BCEWithLogitsLoss(). The first two expect probabilities, so apply the sigmoid function first to map the raw values into the range from 0 to 1. BCEWithLogitsLoss() applies the sigmoid internally and accepts raw logits. All of these functions compute the loss between the target values and the predicted values stored in tensors. This guide has elaborated on the process of getting the loss using the BCE functions in PyTorch.