PyTorch is a framework for designing and training deep learning models built on neural network architectures. A model is evaluated by comparing its predictions with the actual target values and measuring the difference between them, which is the loss. Sometimes the training data does not have balanced classes, so the resulting model can report high accuracy and low loss while still making obviously wrong predictions for the under-represented classes.

Table of Contents

This guide explains the following sections:

- What is NLL Loss

- How to Calculate NLL Loss in PyTorch

- Prerequisites

- Example 1: Using NLLLoss() With 1D Data

- Example 2: Using NLLLoss() With 2D Data

- Example 3: Using Functional NLL Loss

- Example 4: Using Heteroscedastic GaussianNLLLoss()

- Example 5: Using Homoscedastic GaussianNLLLoss()

- Conclusion

What is NLL Loss

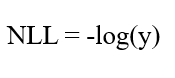

The Negative Log Likelihood (NLL) loss is generally used together with the softmax() function, which normalizes the raw scores into probabilities between 0 and 1 that sum to 1. The log of each of these probabilities is negative (or zero), so a negative sign is applied to make the final loss value positive. For each sample, the loss is the negative log of the probability that the model assigns to the correct class, so a confident correct prediction gives a loss close to 0.
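As a sketch of the definition in symbols, assume a batch of N samples with C classes, raw scores z, and true class index y_i for sample i (these symbols are introduced here for illustration and do not come from the original figure). The softmax probabilities and the averaged NLL loss can then be written as:

$$
p_{i,c} = \frac{e^{z_{i,c}}}{\sum_{k=1}^{C} e^{z_{i,k}}}, \qquad
\mathrm{NLL} = -\frac{1}{N} \sum_{i=1}^{N} \log p_{i,\,y_i}
$$

Because every p lies between 0 and 1, each log term is at most 0, and the leading minus sign makes the loss non-negative.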

How to Calculate NLL Loss in PyTorch

The PyTorch framework offers multiple methods, such as NLLLoss() and GaussianNLLLoss(), to calculate the negative log likelihood loss. NLLLoss() expects log-probabilities as its input, so it is paired with the LogSoftmax() method, which applies softmax to bring the values into the range 0 to 1 and then takes their log. The following sections walk through the calculation in the PyTorch environment.
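The pairing of a log-softmax step with the NLL loss can be checked with a short sketch. The tensor shapes, the seed, and the target values below are arbitrary choices for illustration. The comparison relies on nn.CrossEntropyLoss() combining the log-softmax and NLL steps when given raw scores:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the run is repeatable

# Illustrative data: 4 samples, 3 classes (shapes chosen for this sketch)
logits = torch.randn(4, 3)
target = torch.tensor([0, 2, 1, 2])

# NLLLoss expects log-probabilities, so apply LogSoftmax first
log_probs = nn.LogSoftmax(dim=1)(logits)
nll = nn.NLLLoss()(log_probs, target)

# CrossEntropyLoss takes the raw scores and applies both steps internally
ce = nn.CrossEntropyLoss()(logits, target)

same = torch.allclose(nll, ce)
print(nll.item(), ce.item(), same)
```

If the softmax output were passed in without the log, NLLLoss() would still return a number, but it would not be a negative log likelihood.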

Note: The code used in this guide, written in Python, is available here:

Prerequisites

Before working through the examples for calculating the NLL loss in PyTorch, complete these steps:

- Access Python Notebook

- Install Modules

- Import Libraries

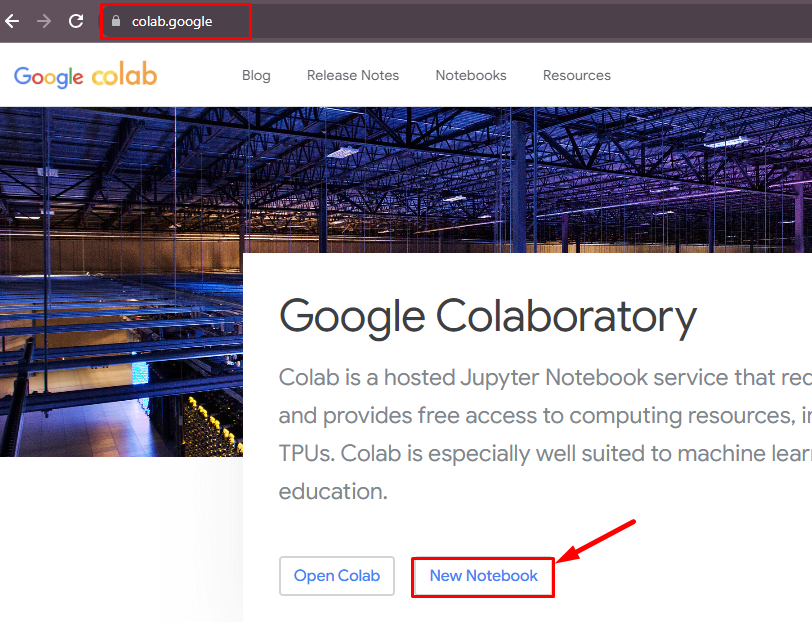

Access Python Notebook

To write the Python code, open a notebook that can run it, such as Google Colab from the official website:

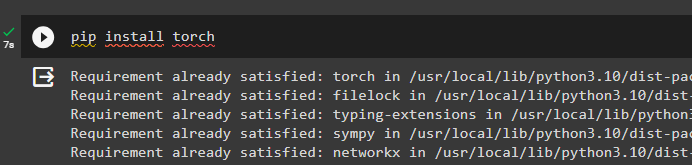

Install Modules

In the Google Colab notebook, execute the following command to install the torch library and its dependencies. The pip command is Python's package installer and makes it easy to install packages such as torch:

pip install torch

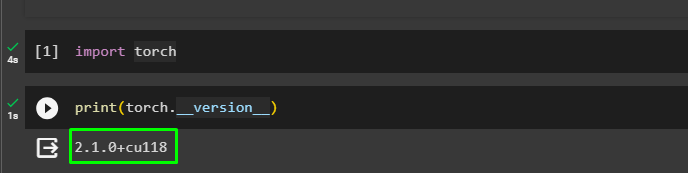

Import Libraries

Once the module is installed for the session in the colab notebook, simply import its library using the following command:

import torch

Print the installed version of torch by using the torch.__version__ command to verify that the environment is available for the session:

print(torch.__version__)

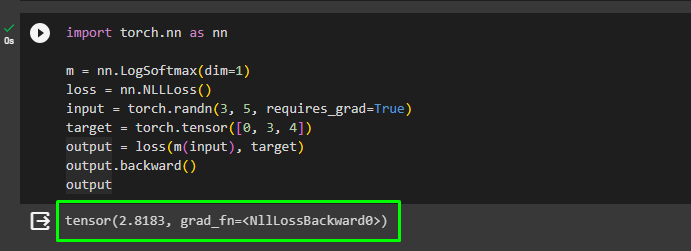

Example 1: Using NLLLoss() With 1D Data

The first example uses the NLLLoss() method from the torch library to get the loss for a one-dimensional target, where each sample has a single class index. Store the LogSoftmax() method in the m variable and the NLLLoss() method in the loss variable. After building the input and target tensors, both methods can be called through their variables. The input is a 3 x 5 tensor (three samples, five classes) and the target holds one class index per sample. Call the loss function on the LogSoftmax() output of the input together with the target values to calculate the loss:

import torch.nn as nn

m = nn.LogSoftmax(dim=1)

loss = nn.NLLLoss()

input = torch.randn(3, 5, requires_grad=True)

target = torch.tensor([0, 3, 4])

output = loss(m(input), target)

output.backward()

output

Call the output variable, which contains the loss value stored as a tensor, to display it on the screen:

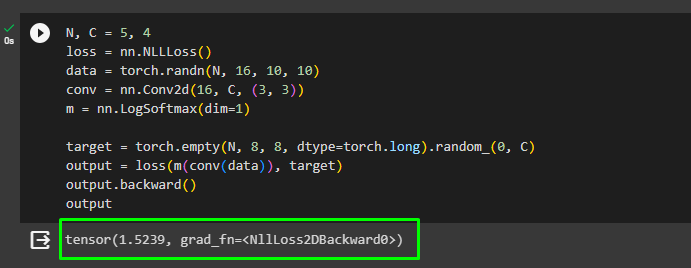
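To see what NLLLoss() computes in the example above, the value can be reproduced by hand. This is a minimal sketch that assumes the default reduction of "mean". The names log_probs, picked, builtin, and manual are introduced here for illustration:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the run is repeatable

x = torch.randn(3, 5)              # 3 samples, 5 classes
target = torch.tensor([0, 3, 4])   # one class index per sample

log_probs = nn.LogSoftmax(dim=1)(x)
builtin = nn.NLLLoss()(log_probs, target)

# Pick the log-probability of the correct class in each row,
# then negate and average over the batch
picked = log_probs[torch.arange(3), target]
manual = -picked.mean()

print(builtin.item(), manual.item())
```

Both values should agree, which shows that the loss only looks at the log-probability assigned to the correct class of each sample.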

Example 2: Using NLLLoss() With 2D Data

The second example calculates the NLL loss for two-dimensional data, such as images, where every pixel has its own class label. Create the input tensor with the size N x 16 x height x width and pass it through a convolution layer that produces C output channels, one per class. Call the LogSoftmax() method over the channel dimension to convert the scores to log-probabilities. After that, call the backward() method to run backpropagation, which computes the gradients of the loss that an optimizer would use to update the model:

N, C = 5, 4

loss = nn.NLLLoss()

data = torch.randn(N, 16, 10, 10)

conv = nn.Conv2d(16, C, (3, 3))

m = nn.LogSoftmax(dim=1)

target = torch.empty(N, 8, 8, dtype=torch.long).random_(0, C)

output = loss(m(conv(data)), target)

output.backward()

output

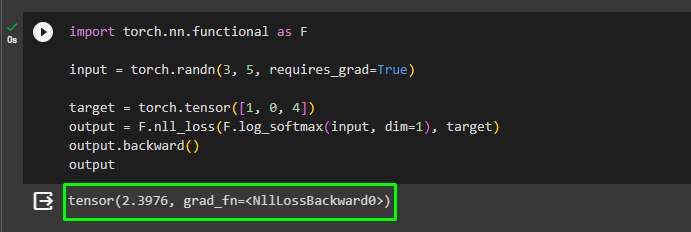
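The shapes involved in the two-dimensional case can be inspected with a small sketch. It reuses the sizes from the example above, uses torch.randint() to build the target as a convenience, and passes reduction="none" to keep one loss value per pixel. The variable names are chosen for this sketch:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the run is repeatable

N, C = 5, 4
data = torch.randn(N, 16, 10, 10)
conv = nn.Conv2d(16, C, (3, 3))

# A 3x3 kernel without padding trims each spatial side from 10 to 8
scores = conv(data)                        # shape (N, C, 8, 8)
log_probs = nn.LogSoftmax(dim=1)(scores)   # normalize over the class channel

target = torch.randint(0, C, (N, 8, 8))    # one class index per pixel

# reduction="none" keeps the per-pixel losses instead of averaging them
per_pixel = nn.NLLLoss(reduction="none")(log_probs, target)
mean_loss = nn.NLLLoss()(log_probs, target)

print(scores.shape, per_pixel.shape, mean_loss.item())
```

The scalar returned by the default NLLLoss() is the average of these per-pixel values.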

Example 3: Using Functional NLL Loss

Import the functional module as F to call the loss as a plain function, and create two tensors named input and target. The input tensor is two-dimensional (three samples, five classes) and the target is a one-dimensional tensor with three class indices, one for each row of the input. Call the F.nll_loss() method with the F.log_softmax() function applied to the input values to find the loss against the target values:

import torch.nn.functional as F

input = torch.randn(3, 5, requires_grad=True)

target = torch.tensor([1, 0, 4])

output = F.nll_loss(F.log_softmax(input, dim=1), target)

output.backward()

output

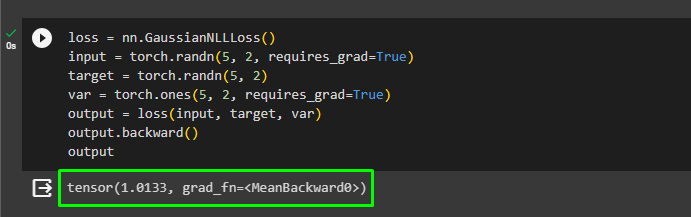
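Since unbalanced classes were the motivation at the start of this guide, the weight argument of F.nll_loss() is worth a quick sketch. The weight values below are hypothetical and only illustrate giving one class more influence. The manual check assumes the default "mean" reduction, which divides by the sum of the weights of the target classes:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)  # fixed seed so the run is repeatable

x = torch.randn(3, 5)
target = torch.tensor([1, 0, 4])
log_probs = F.log_softmax(x, dim=1)

# Hypothetical per-class weights: class 1 counts three times as much
weight = torch.tensor([1.0, 3.0, 1.0, 1.0, 1.0])

weighted = F.nll_loss(log_probs, target, weight=weight)

# Manual check: weighted average of the unweighted per-sample losses
per_sample = F.nll_loss(log_probs, target, reduction="none")
w = weight[target]
manual = (w * per_sample).sum() / w.sum()

print(weighted.item(), manual.item())
```

In practice the weights are often chosen from class frequencies in the training data, but that choice is outside the scope of this sketch.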

Example 4: Using Heteroscedastic GaussianNLLLoss()

This example uses the heteroscedastic Gaussian NLL loss in PyTorch, which means that the variance of the predicted values is not constant. Store the GaussianNLLLoss() method in the loss variable and then create two tensors, input and target, with the same two-dimensional shape. Create the var tensor with the same shape as the input so that each predicted value has its own variance, and pass all three tensors to the loss to store the result in the output variable:

loss = nn.GaussianNLLLoss()

input = torch.randn(5, 2, requires_grad=True)

target = torch.randn(5, 2)

var = torch.ones(5, 2, requires_grad=True)

output = loss(input, target, var)

output.backward()

output
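The value returned by GaussianNLLLoss() can be reproduced by hand as a sanity check. This sketch assumes the default settings (full=False, eps=1e-6, reduction="mean"), under which the constant term of the Gaussian log likelihood is omitted. The random variances and the names mean, clamped, builtin, and manual are chosen for this sketch:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the run is repeatable

mean = torch.randn(5, 2)         # predicted means
target = torch.randn(5, 2)
var = torch.rand(5, 2) + 0.5     # a separate positive variance per element

builtin = nn.GaussianNLLLoss()(mean, target, var)

# Manual version: 0.5 * (log(var) + (mean - target)^2 / var), averaged.
# The variance is clamped at eps for numerical stability.
eps = 1e-6
clamped = var.clamp(min=eps)
manual = (0.5 * (torch.log(clamped) + (mean - target) ** 2 / clamped)).mean()

print(builtin.item(), manual.item())
```

Unlike NLLLoss(), this loss can be negative, because the log of a variance below 1 is negative.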

Example 5: Using Homoscedastic GaussianNLLLoss()

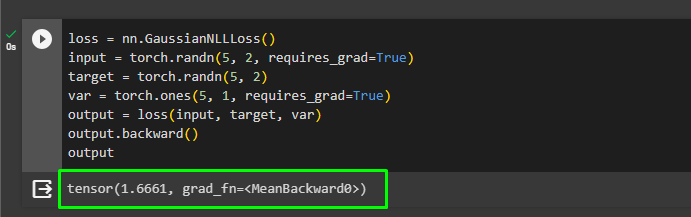

The homoscedastic approach means that the variance of the predicted values remains constant, and the GaussianNLLLoss() method from the torch.nn module handles this case as well. The only change from the previous example is that the “var” tensor is created with 5×1 dimensions, so one variance is shared across both values in each row. Call the output variable to display the value of the loss evaluated by the loss variable:

loss = nn.GaussianNLLLoss()

input = torch.randn(5, 2, requires_grad=True)

target = torch.randn(5, 2)

var = torch.ones(5, 1, requires_grad=True)

output = loss(input, target, var)

output.backward()

output
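A short sketch can confirm that a 5×1 variance tensor is broadcast across the columns of the input. The variance value 0.7 and the names below are arbitrary choices for illustration:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the run is repeatable

loss_fn = nn.GaussianNLLLoss()
mean = torch.randn(5, 2)
target = torch.randn(5, 2)

# One variance per row, shared by both columns
var_col = torch.full((5, 1), 0.7)
loss_broadcast = loss_fn(mean, target, var_col)

# The same variances written out explicitly for every element
var_full = var_col.expand(5, 2).contiguous()
loss_expanded = loss_fn(mean, target, var_full)

print(loss_broadcast.item(), loss_expanded.item())
```

Both calls should return the same loss, so the 5×1 form is simply a more compact way to state that the variance does not change within a row.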

That’s all about the process of calculating the Negative Log Likelihood Loss in PyTorch.

Conclusion

To calculate the Negative Log Likelihood or NLL loss in PyTorch, install the torch framework using the pip command. Once torch is installed, use the NLLLoss() and functional.nll_loss() methods to calculate the loss value from log-probabilities. The torch library also provides the GaussianNLLLoss() method to evaluate the loss with homoscedastic or heteroscedastic variance. This guide has elaborated on the process of calculating the Negative Log Likelihood loss in PyTorch.