PyTorch is a framework used to build and train Deep Learning (DL) models on diverse datasets. These models are designed to extract useful insights or uncover hidden patterns in the data. A trained model predicts future events based on the historical data it was given during training. To evaluate the model's performance, its predictions are compared with the actual (target) values, typically by computing a loss.

Table of Contents

This guide explains the following sections:

- What is KL Divergence Loss in PyTorch

- How to Calculate KL Divergence Loss in PyTorch

- Prerequisites

- Example 1: Using KLDivergence() Method

- Example 2: Using Functional KLDivLoss() Method

- Example 3: Using KLDivLoss() Method With log_target

- Example 4: Using Softmax with KLDivLoss() Method

- Conclusion

What is KL Divergence Loss in PyTorch

The Kullback-Leibler (KL) divergence measures how much one probability distribution P differs from a second, reference distribution Q. During training, the goal is to minimize it. A high divergence means the model's predicted distribution is far from the target distribution. A divergence close to zero means the two distributions nearly match. For discrete distributions over a set of events X, the KL divergence can be written as a negative sum:

$$D_{KL}(P \parallel Q) = -\sum_{x \in X} P(x) \log\frac{Q(x)}{P(x)}$$

Moving the negative sign inside the sum inverts the ratio inside the logarithm. This gives the equivalent positive-sum form:

$$D_{KL}(P \parallel Q) = \sum_{x \in X} P(x) \log\frac{P(x)}{Q(x)}$$
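The following plain-Python snippet is a minimal numeric sketch of the negative-sum and positive-sum forms described above. It evaluates both sums for one pair of example distributions, the same values used in Example 1, chosen only for illustration. It uses the natural logarithm, so the result is in nats. PyTorch's KL utilities also use the natural logarithm.

```python
import math

# Two example discrete distributions over the same three events (each sums to 1)
P = [0.36, 0.48, 0.16]
Q = [1/3, 1/3, 1/3]

# Negative-sum form: -sum P(x) * log(Q(x) / P(x))
kl_negative_form = -sum(p * math.log(q / p) for p, q in zip(P, Q))

# Positive-sum form: sum P(x) * log(P(x) / Q(x))
kl_positive_form = sum(p * math.log(p / q) for p, q in zip(P, Q))

print(round(kl_negative_form, 4), round(kl_positive_form, 4))  # 0.0853 0.0853
```

Both forms give the same value, which confirms that the two expressions are equivalent.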

How to Calculate KL Divergence Loss in PyTorch

In PyTorch, the KL divergence loss can be calculated with several tools. Examples include the KLDivergence class from the TorchMetrics library and PyTorch's built-in torch.nn.KLDivLoss class, which is also available as the function torch.nn.functional.kl_div. These tools become available once the corresponding libraries are imported in Python. To learn how to calculate the KL divergence loss in PyTorch, go through the following examples:

Note: The Python code used in this guide can be accessed from here:

Prerequisites

Before getting into the process of calculating the KL divergence in PyTorch, go through the following steps:

- Access Python Notebook

- Install Modules

- Import Libraries

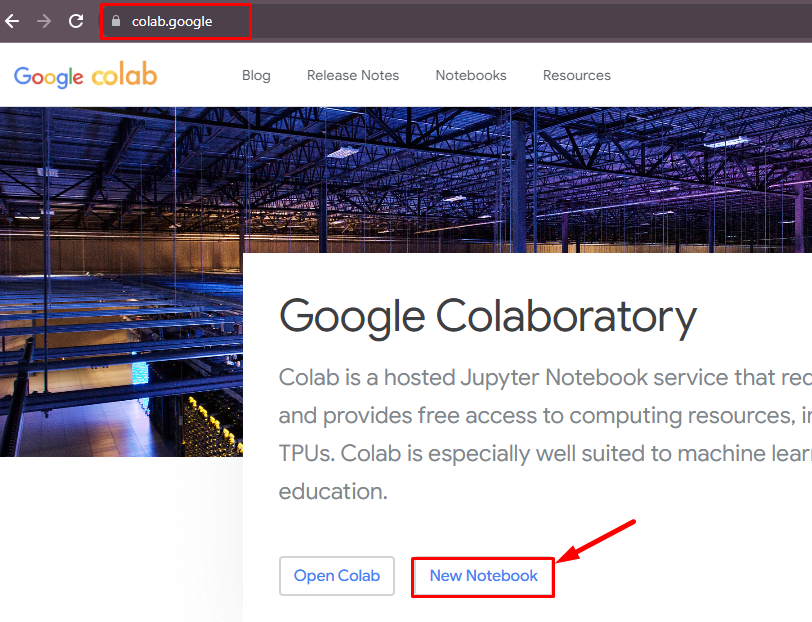

Access Python Notebook

The first step to get started with this guide is to open a new notebook from the official Google Colaboratory page:

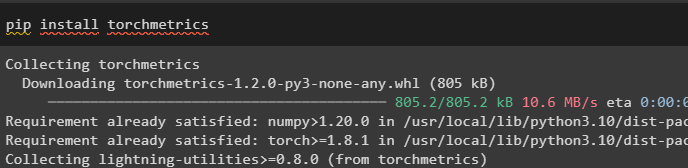

Install Modules

Install the torchmetrics library with pip, the Python package manager. Because torchmetrics depends on torch, pip also installs PyTorch if it is not already available. Google Colab comes with PyTorch preinstalled.

```
pip install torchmetrics
```

Import Libraries

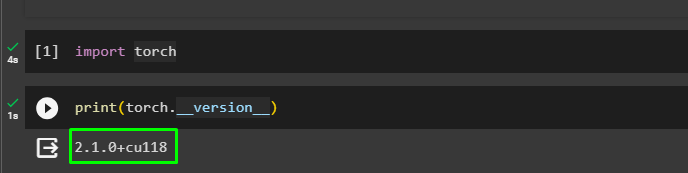

Once the module is installed successfully, import the torch library. It provides the KLDivLoss class. The KLDivergence class comes from torchmetrics and is imported separately in Example 1:

```python
import torch
```

Print the installed version of the torch module to confirm that it is available for use:

```python
print(torch.__version__)
```

Example 1: Using KLDivergence() Method

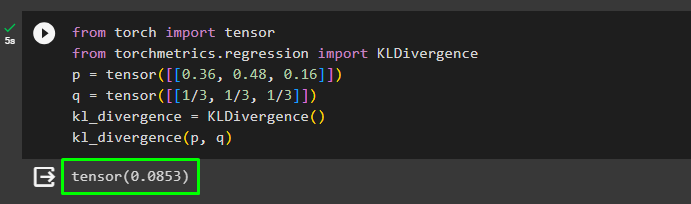

Import tensor from the torch library to create the input data. Then import the KLDivergence class from the torchmetrics framework to compute the divergence. Create two tensors, p and q, where each row holds one probability distribution that sums to 1. Instantiate KLDivergence(), store it in the kl_divergence variable, and call it on p and q to compute the divergence of p from q:

```python
from torch import tensor
from torchmetrics.regression import KLDivergence

# Each row is a probability distribution (values sum to 1)
p = tensor([[0.36, 0.48, 0.16]])
q = tensor([[1/3, 1/3, 1/3]])

kl_divergence = KLDivergence()
kl_divergence(p, q)
```

The following screenshot displays the divergence value, returned as a tensor by the kl_divergence metric:
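The sketch below shows what the metric computes by reproducing the value directly from the formula with plain tensor operations. It assumes that KLDivergence, with its default settings, sums p * log(p / q) over the last dimension and then averages over the rows of the batch. With a single row, that average is simply the one per-row value. Swapping the arguments shows that KL divergence is not symmetric, which is why it is called a divergence rather than a distance.

```python
import torch

p = torch.tensor([[0.36, 0.48, 0.16]])
q = torch.tensor([[1/3, 1/3, 1/3]])

# KL(p || q): sum over the last dimension, then average over the rows (batch)
kl_pq = (p * (p / q).log()).sum(dim=-1).mean()

# KL(q || p): swapping the arguments gives a different value
kl_qp = (q * (q / p).log()).sum(dim=-1).mean()

print(kl_pq, kl_qp)  # tensor(0.0853) tensor(0.0975)
```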

Example 2: Using Functional KLDivLoss() Method

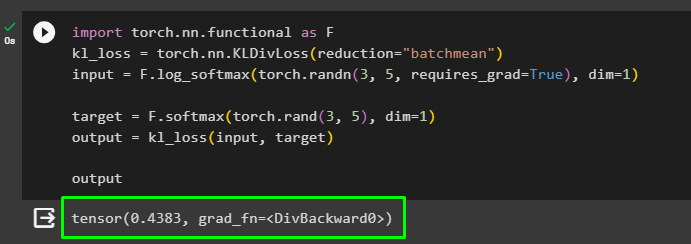

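Another way to calculate the KL divergence loss is PyTorch's built-in torch.nn.KLDivLoss class, used here together with the torch.nn.functional module (imported as F). Create the loss and store it in the kl_loss variable, with the reduction parameter set to "batchmean". With "batchmean", the summed loss is divided by the batch size, which is the first dimension of the input. This matches the mathematical definition of KL divergence averaged over samples. The default "mean" reduction instead divides by the total number of elements. KLDivLoss expects the input to contain log-probabilities and, by default, the target to contain probabilities. Therefore, build the input with log_softmax() and the target with softmax(), both applied to random tensors along dim=1: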

```python
import torch
import torch.nn.functional as F

# "batchmean" divides the summed loss by the batch size (3 here)
kl_loss = torch.nn.KLDivLoss(reduction="batchmean")

# The input must hold log-probabilities; the target holds probabilities
input = F.log_softmax(torch.randn(3, 5, requires_grad=True), dim=1)
target = F.softmax(torch.rand(3, 5), dim=1)

output = kl_loss(input, target)
output
```

Calling kl_loss on the two tensors computes the loss between them, and the notebook displays it on the screen:

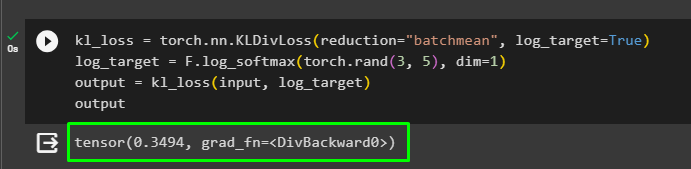
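PyTorch also exposes this loss in its functional module as F.kl_div. The sketch below checks that the module, the functional form, and the formula written out by hand all give the same result with reduction="batchmean". The pointwise terms target * (log(target) - input) are summed and then divided by the batch size. The fixed random seed and the variable names are illustrative additions, used only to make the comparison reproducible.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)  # illustrative fixed seed for reproducibility

log_input = F.log_softmax(torch.randn(3, 5), dim=1)  # log-probabilities
target = F.softmax(torch.rand(3, 5), dim=1)          # probabilities

# Module and functional forms of the same loss
module_loss = torch.nn.KLDivLoss(reduction="batchmean")(log_input, target)
functional_loss = F.kl_div(log_input, target, reduction="batchmean")

# Manual formula: sum of target * (log(target) - input), divided by the batch size (3)
manual_loss = (target * (target.log() - log_input)).sum() / log_input.size(0)

print(module_loss, functional_loss, manual_loss)
```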

Example 3: Using KLDivLoss() Method With log_target

Create a KLDivLoss instance with both the reduction and log_target parameters, and store it in the kl_loss variable. The log_target parameter is False by default. Setting it to True tells the loss that the target is also given in log space, as log-probabilities. This avoids numerical problems that can arise from taking logarithms explicitly, for example of probabilities that underflow to zero. Then apply log_softmax() to a random tensor to create log_target, and calculate its divergence from the input tensor created in the previous example:

```python
# log_target=True: the target is passed as log-probabilities
kl_loss = torch.nn.KLDivLoss(reduction="batchmean", log_target=True)

log_target = F.log_softmax(torch.rand(3, 5), dim=1)

output = kl_loss(input, log_target)
output
```

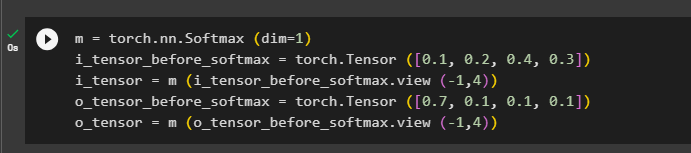
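The sketch below shows what log_target changes. It passes the same target twice: once as log-probabilities with log_target=True, and once as ordinary probabilities (recovered with exp()) using the default log_target=False. The two losses should agree up to floating-point rounding. The fixed seed and variable names are illustrative only.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)  # illustrative fixed seed for reproducibility

log_input = F.log_softmax(torch.randn(3, 5), dim=1)
log_target = F.log_softmax(torch.rand(3, 5), dim=1)

# Target given in log space
loss_log_space = torch.nn.KLDivLoss(reduction="batchmean", log_target=True)(log_input, log_target)

# Same target converted back to probabilities, default log_target=False
loss_prob_space = torch.nn.KLDivLoss(reduction="batchmean")(log_input, log_target.exp())

print(torch.allclose(loss_log_space, loss_prob_space, atol=1e-6))  # True
```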

Example 4: Using Softmax with KLDivLoss() Method

Create a torch.nn.Softmax module with dim=1 and store it in the m variable. Softmax converts raw scores (logits) into probabilities that are positive and sum to 1. Then create two raw tensors, i_tensor_before_softmax and o_tensor_before_softmax. Also create their softmax-normalized versions, i_tensor and o_tensor, each reshaped with view(-1, 4) into a batch of one row:

```python
# Softmax along dim=1 turns each row of raw scores into probabilities
m = torch.nn.Softmax(dim=1)

i_tensor_before_softmax = torch.tensor([0.1, 0.2, 0.4, 0.3])
i_tensor = m(i_tensor_before_softmax.view(-1, 4))  # shape (1, 4)

o_tensor_before_softmax = torch.tensor([0.7, 0.1, 0.1, 0.1])
o_tensor = m(o_tensor_before_softmax.view(-1, 4))  # shape (1, 4)
```

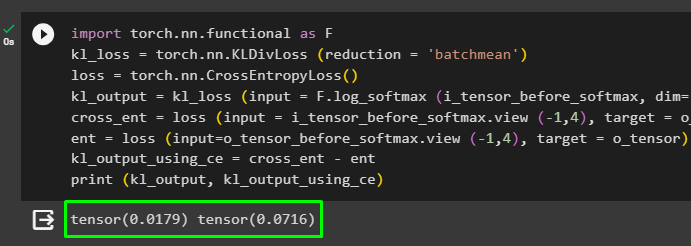
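Softmax computes exp(x_i) / sum_j exp(x_j) for each element. This keeps every value between 0 and 1 and makes each row sum to 1. The sketch below compares a hand-written version with torch.nn.Softmax, using the same raw input scores as above. The variable names are illustrative.

```python
import math
import torch

scores = [0.1, 0.2, 0.4, 0.3]

# Softmax by hand: exp(x_i) / sum_j exp(x_j)
exps = [math.exp(x) for x in scores]
manual = [e / sum(exps) for e in exps]

# torch.nn.Softmax with dim=1 over a batch containing one row
m = torch.nn.Softmax(dim=1)
torch_probs = m(torch.tensor(scores).view(-1, 4))

print(manual)
print(torch_probs, torch_probs.sum(dim=1))
```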

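Import the functional module as F, which provides log_softmax(). Create a KLDivLoss instance with reduction='batchmean' and store it in the kl_loss variable. Also create a CrossEntropyLoss instance and store it in the loss variable. Call kl_loss with the log-softmax of the raw input scores as the input and o_tensor as the target, and store the result in kl_output.

The same value can be obtained from cross-entropy, because KL(P || Q) = H(P, Q) - H(P): KL divergence equals cross-entropy minus entropy. In the code, cross_ent holds the cross-entropy between the target o_tensor and the softmax of the raw input scores. The ent variable holds the entropy of o_tensor, computed as the cross-entropy of o_tensor with its own raw scores. Their difference is stored in kl_output_using_ce: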

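```python
import torch.nn.functional as F

kl_loss = torch.nn.KLDivLoss(reduction="batchmean")
loss = torch.nn.CrossEntropyLoss()

# Reshape to (1, 4) so that "batchmean" divides by the batch size (1),
# not by the number of classes (4)
kl_output = kl_loss(input=F.log_softmax(i_tensor_before_softmax.view(-1, 4), dim=-1), target=o_tensor)

# Cross-entropy H(P, Q): target P = o_tensor, prediction Q = softmax of the input scores
cross_ent = loss(input=i_tensor_before_softmax.view(-1, 4), target=o_tensor)

# Entropy H(P): cross-entropy of o_tensor with its own raw scores
ent = loss(input=o_tensor_before_softmax.view(-1, 4), target=o_tensor)

# KL(P || Q) = H(P, Q) - H(P)
kl_output_using_ce = cross_ent - ent

print(kl_output, kl_output_using_ce)
```

Print kl_output and kl_output_using_ce to compare two results: the KL divergence computed directly by KLDivLoss, and the value derived from the cross-entropy losses. With the input reshaped to a batch of one row, the two values match up to floating-point rounding.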
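KLDivLoss with reduction="batchmean" divides the summed loss by the size of the input's first dimension, so the shape of the tensors passed to kl_loss matters. The sketch below uses the same raw scores as this example. With 1-D tensors, KLDivLoss treats the four classes as the batch and returns a value four times smaller than the per-sample KL divergence. A (1, 4) batch of one sample returns the full divergence. The variable names are illustrative.

```python
import torch
import torch.nn.functional as F

scores = torch.tensor([0.1, 0.2, 0.4, 0.3])                          # raw input scores, shape (4,)
target = torch.softmax(torch.tensor([0.7, 0.1, 0.1, 0.1]), dim=-1)  # probabilities, shape (4,)

kl_loss = torch.nn.KLDivLoss(reduction="batchmean")

# 1-D tensors: the first dimension has size 4, so the sum is divided by 4
kl_1d = kl_loss(F.log_softmax(scores, dim=-1), target)

# 2-D tensors of shape (1, 4): one sample, so the sum is divided by 1
kl_2d = kl_loss(F.log_softmax(scores.view(1, -1), dim=-1), target.view(1, -1))

print(kl_1d * 4, kl_2d)
```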

That’s all about the process of calculating the KL divergence loss in PyTorch.

Conclusion

To calculate the KL divergence loss in PyTorch, use either the KLDivergence class from the torchmetrics library (installed with pip) or PyTorch's built-in KLDivLoss class. KLDivLoss expects log-probabilities as its input and, unless log_target=True, probabilities as its target. Use reduction="batchmean" to match the mathematical definition. This guide has explained the process in detail with multiple examples using both methods, along with a comparison against the cross-entropy loss.