LangChain is a framework for building applications on top of language models, such as chatbots that users can ask targeted questions. A chatbot has a chat interface in which the user sends a message asking a question and the model responds accordingly. Such models can be more convenient than searching the internet because they answer the question directly. However, the model needs to be trained on an up-to-date dataset so that noise can be avoided in the response.

LangChain also enables developers to build models using external help like the OpenAI environment and its functions to get answers in a structured format. OpenAI chat models like GPT-3.5 and GPT-4 have been updated with the ability to call functions. A LangChain model can request an OpenAI function call when a user asks a question in natural language.

How to Use Tools in LangChain That Call the OpenAI Functions

The OpenAI functions can be used with the language models in LangChain to get better performance from the model. The user simply asks a question in a natural language such as English, and the agent that controls the process is responsible for getting the response. If the agent decides that an OpenAI function is needed to answer the question, it calls that function.
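The decision described above can be sketched without calling any API. The snippet below is only an illustration, not LangChain's agent implementation: the two reply dictionaries are hand-written stand-ins for model responses, and `route_response` is a hypothetical helper name.

```python
def route_response(response: dict) -> str:
    """Decide what to do with a model reply (hypothetical helper)."""
    # A reply that requests a function carries a "function_call" entry.
    call = response.get("additional_kwargs", {}).get("function_call")
    if call is not None:
        return f"call function: {call['name']}"
    # Otherwise the reply is a plain-text answer.
    return f"answer directly: {response['content']}"


# Hand-written stand-ins for two kinds of model replies (assumed shapes).
text_reply = {"content": "Paris is the capital of France.", "additional_kwargs": {}}
call_reply = {
    "content": "",
    "additional_kwargs": {"function_call": {"name": "move_file", "arguments": "{}"}},
}

print(route_response(text_reply))
print(route_response(call_reply))
```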

To use the OpenAI functions, the language model needs to be integrated with the functions, which can be imported using the LangChain dependencies. Once the agent is built with the language model and the functions, it uses the functions whenever the process requires any of them. This guide uses Python scripts in a Google Colaboratory notebook, and its complete code can be accessed here.

To go through the detailed implementation of the process of using tools that call the OpenAI functions, simply follow the steps below:

Step 1: Install Modules

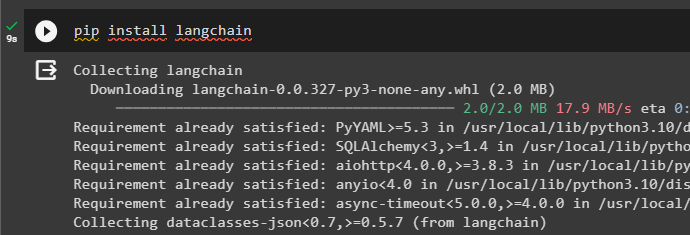

First, install the LangChain modules by executing the following code to get its dependencies for building the language model. The dependencies are also required to use the OpenAI functions while building and using the LangChain tools:

pip install langchain

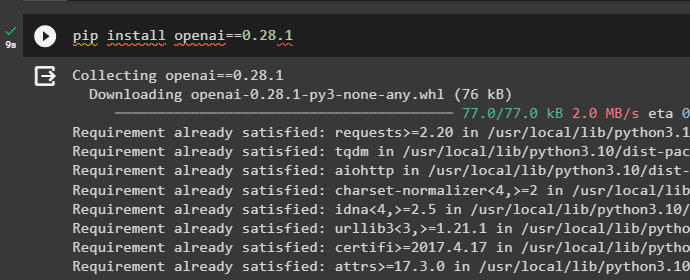

Install the OpenAI framework that can be used to access its environment and functions as well. The OpenAI environment can be accessed while building the language model to get the answers from the agent:

pip install openai==0.28.1

Step 2: Setup Environments

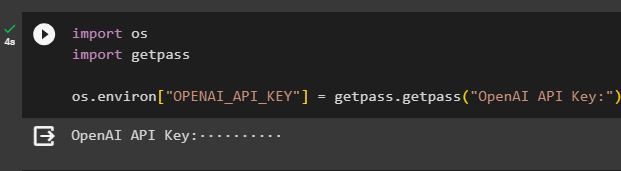

Set up the OpenAI environment by executing the following code in the Python notebook. The user needs to get an API key from their OpenAI account and provide it to the code. Executing the code prompts the user to enter the API key and press Enter to complete the process:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
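When the notebook cell is re-run, prompting for the key every time can get tedious. A minimal sketch of one way around that is shown below, assuming it is acceptable to reuse a key that is already present in the environment; `ensure_api_key` is a hypothetical helper name, not part of LangChain or OpenAI.

```python
import getpass
import os


def ensure_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the key stored in var_name, prompting only if it is missing."""
    if not os.environ.get(var_name):
        # getpass hides the typed key so it does not show up in the notebook output.
        os.environ[var_name] = getpass.getpass("OpenAI API Key:")
    return os.environ[var_name]
```

Calling `ensure_api_key()` once at the top of the notebook leaves the key in `os.environ`, where the OpenAI client looks for it.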

Step 3: Importing Libraries

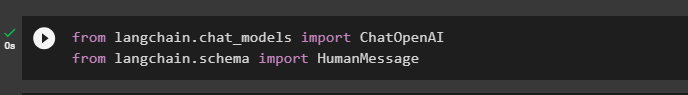

Now that the environment is set and the modules are installed, import the required libraries from LangChain dependencies like chat_models and schema. The libraries required for this guide are ChatOpenAI to build the language model and HumanMessage to pass the user's message to the model in a structured form:

from langchain.chat_models import ChatOpenAI

from langchain.schema import HumanMessage

Step 4: Building Language Model

The OpenAI platform offers multiple models that can call OpenAI functions in LangChain, like GPT-4 and GPT-3.5 with their updated variants. The variants differ in, among other things, the number of tokens they can handle. To learn about the models offered by OpenAI which can be used to call the functions, click on this guide.

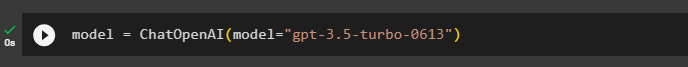

The following code builds the language model using gpt-3.5-turbo-0613, which was updated to support the OpenAI functions. Define the model variable by calling ChatOpenAI() with the name of the GPT model as its argument:

model = ChatOpenAI(model="gpt-3.5-turbo-0613")

The above code uses the GPT model that can provide output in a structured format like JSON. This update also came with a 25% reduction in the price of input tokens, so the user gets more of them at a lower price ($0.0015 per 1K input tokens). The user can also use the latest model by typing the base name of the model, which in our case is “gpt-3.5-turbo”.
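To put the per-token price quoted above in perspective, the arithmetic can be sketched as follows. The price constant is taken from this guide and should be treated as an assumption that may be outdated; `estimate_cost_usd` is a hypothetical helper name.

```python
# Price quoted in this guide (USD per 1K tokens); it may no longer be current.
PRICE_PER_1K_TOKENS_USD = 0.0015


def estimate_cost_usd(num_tokens: int, price_per_1k: float = PRICE_PER_1K_TOKENS_USD) -> float:
    """Estimate the cost of sending num_tokens tokens at the given price."""
    return num_tokens / 1000 * price_per_1k


# Example: a prompt of 20,000 tokens.
cost = estimate_cost_usd(20_000)
print(f"{cost:.4f} USD")
```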

Step 5: Getting OpenAI Functions

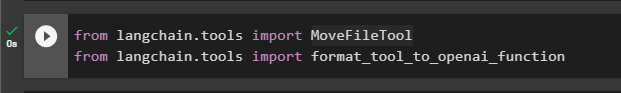

Once the language model is built successfully, import MoveFileTool and format_tool_to_openai_function for building the tools. MoveFileTool is a tool that can move a file from a source to a destination. The format_tool_to_openai_function helper converts a LangChain tool into the function description format that the OpenAI functions feature expects:

from langchain.tools import MoveFileTool

from langchain.tools import format_tool_to_openai_function

Step 6: Building Tools

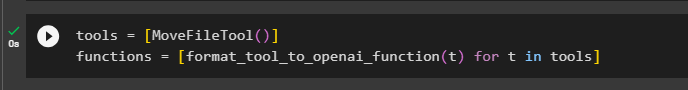

After that, configure the “tools” variable with the MoveFileTool() tool and the “functions” variable with the format_tool_to_openai_function() helper. The helper takes each tool “t” from the tools list as its argument:

tools = [MoveFileTool()]

functions = [format_tool_to_openai_function(t) for t in tools]

The for loop goes through every tool in the tools list and converts it into an OpenAI function description that can be passed to the model.
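An OpenAI function description is a dictionary with a name, a description, and a JSON Schema for the parameters. The sketch below builds one by hand to show the general shape; `tool_to_function_schema` is a hypothetical stand-in, not LangChain's implementation, and the description texts and the assumption that every parameter is a string are made up for the example.

```python
import json


def tool_to_function_schema(name: str, description: str, parameters: dict) -> dict:
    """Build an OpenAI-style function description (hypothetical helper).

    parameters maps each argument name to a short description; every
    argument is assumed to be a required string to keep the sketch small.
    """
    properties = {
        arg: {"type": "string", "description": text}
        for arg, text in parameters.items()
    }
    return {
        "name": name,
        "description": description,
        "parameters": {
            "type": "object",
            "properties": properties,
            "required": list(parameters),
        },
    }


# Illustrative description of a file-moving tool (texts are assumptions).
move_file_function = tool_to_function_schema(
    name="move_file",
    description="Move a file from one location to another",
    parameters={
        "source_path": "Path of the file to move",
        "destination_path": "New path for the moved file",
    },
)
functions_sketch = [move_file_function]
print(json.dumps(functions_sketch, indent=2))
```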

Step 7: Using OpenAI Functions With Model

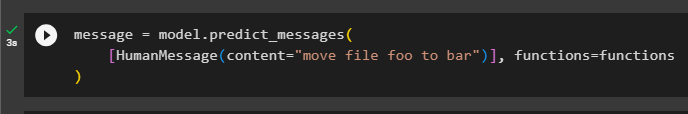

Use HumanMessage() with the content of the message as its argument, and pass it along with the functions argument to the predict_messages() method:

message = model.predict_messages([HumanMessage(content="move file foo to bar")], functions=functions)

The message variable stores the reply returned by calling the predict_messages() method on the model with the required arguments.

Step 8: Testing the Model

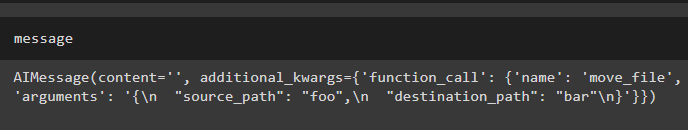

Print the message in a structured output by executing the name of the variable as mentioned below:

message

The content stored in the variable is displayed in the screenshot below in a structured format with fields like content and additional_kwargs. The model does not move the file itself; it returns a function call asking for the tool to be run with the source “foo” and the destination “bar”:

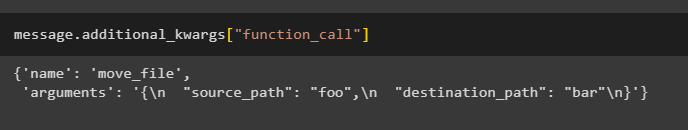

The user can also extract some specific content from the message variable by using the following code:

message.additional_kwargs["function_call"]

The above code returns only the function_call part, leaving out the content part of the message variable.
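The function_call entry only describes what the model wants done, so the calling code still has to run the tool. A minimal sketch of that last step is given below, assuming the entry has a name and a JSON string of arguments shaped like the hand-written sample; `move_file` and `dispatch` are hypothetical stand-ins for running MoveFileTool, and everything happens inside a temporary directory.

```python
import json
import shutil
import tempfile
from pathlib import Path

# Hand-written sample of a function_call entry (assumed shape and argument names).
function_call = {
    "name": "move_file",
    "arguments": '{"source_path": "foo", "destination_path": "bar"}',
}


def move_file(source_path: str, destination_path: str, root: Path) -> Path:
    """Move a file inside root; a stand-in for running the real tool."""
    target = root / destination_path
    shutil.move(str(root / source_path), str(target))
    return target


def dispatch(call: dict, root: Path) -> Path:
    """Look up the requested function by name and run it with the parsed arguments."""
    handlers = {"move_file": move_file}
    arguments = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return handlers[call["name"]](root=root, **arguments)


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "foo").write_text("hello")  # create the file the request refers to
    moved = dispatch(function_call, root)
    moved_name = moved.name
    moved_text = moved.read_text()
    source_still_exists = (root / "foo").exists()

print(moved_name, moved_text, source_still_exists)
```

Keeping the handlers in a dictionary means a request for an unknown function name fails with a KeyError instead of silently doing nothing.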

That’s all about the process of using LangChain tools as OpenAI functions.

Conclusion

To use the LangChain tools as OpenAI functions, install the frameworks to get their dependencies in the Python notebook. After that, set up the OpenAI environment and import the libraries to build the language model using the “gpt-3.5-turbo-0613” version. Then import the dependencies for designing and configuring the tools that the model can request through the OpenAI functions.

Integrate the components to configure the message variable with the input question asked by the user. At the end, test the tools and functions to get the structured output from the contents stored in the message variable. This guide has elaborated on the process of using the tools in LangChain that can call the OpenAI functions.