LangChain lets developers design agents that extract information using language models such as those from OpenAI. Agents handle the intermediate steps of a task, such as thoughts, actions, and observations. To complete these steps and produce the required output, they need tools, or toolkits that bundle several tools. The LangChain framework provides built-in tools and also lets developers build their own custom tools.

How to Create Custom Tools in LangChain?

Agents invoke the language model to carry out the tasks needed to answer the user's request. The model performs these tasks through tools, which can be customized in LangChain by providing the following arguments:

name: A string that identifies the tool so the agent can call it whenever required

description: A string that explains to the model what the tool does and when to call it

return_direct: A boolean value (True/False) that controls whether the tool's output is returned to the user directly instead of being passed back to the agent for further steps. It is False by default.

args_schema: An optional but recommended BaseModel that provides additional information or validation for the parameters.
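As a rough, library-free illustration of how these four arguments fit together, the sketch below models a tool as a plain Python dataclass. The SimpleTool class, its validate hook, and the require_non_empty helper are hypothetical stand-ins and not LangChain's actual classes; they only mirror the roles of name, description, return_direct, and args_schema.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SimpleTool:
    """Hypothetical stand-in for a LangChain tool (not the real class)."""

    name: str                      # identifier the agent uses to pick the tool
    description: str               # tells the model what the tool does and when to call it
    func: Callable[[str], str]     # the function that does the actual work
    return_direct: bool = False    # if True, the output goes straight back to the user
    validate: Optional[Callable[[str], None]] = None  # plays the role of args_schema

    def run(self, tool_input: str) -> str:
        # Validate the input first, if a validator was supplied.
        if self.validate is not None:
            self.validate(tool_input)
        return self.func(tool_input)


def require_non_empty(tool_input: str) -> None:
    """Illustrative validator: reject empty inputs."""
    if not tool_input.strip():
        raise ValueError("tool input must not be empty")


echo = SimpleTool(
    name="Echo",
    description="Repeats the input back; useful only for demonstration.",
    func=lambda text: f"You said: {text}",
    validate=require_non_empty,
)

print(echo.run("hello"))  # You said: hello
```

In the real framework the schema is a pydantic BaseModel rather than a plain function, but the division of responsibilities is similar: the name and description guide the model, while the schema guards the input.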

Tools are the functions that allow the agent to interact with external resources such as search engines and calculators. To learn how to customize them, follow the steps listed below. The code for building the custom tools is executed in Google Colaboratory and it can be accessed here:

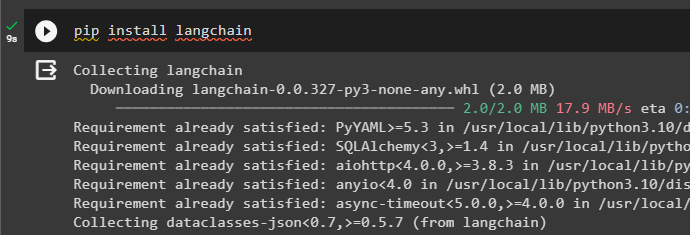

Step 1: Install Modules

First, open the Python notebook and execute the following command to install the LangChain framework:

pip install langchain

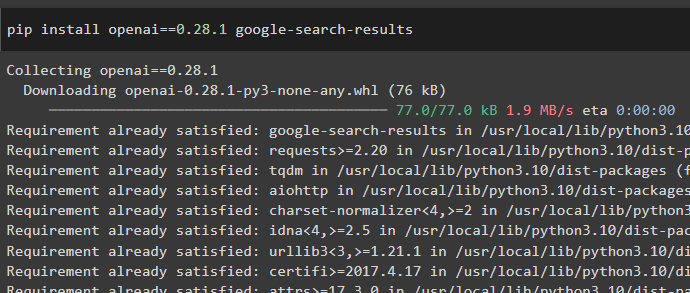

Now, install the openai and google-search-results modules. They are used to build a language model that can answer questions using results from the internet:

pip install openai==0.28.1 google-search-results

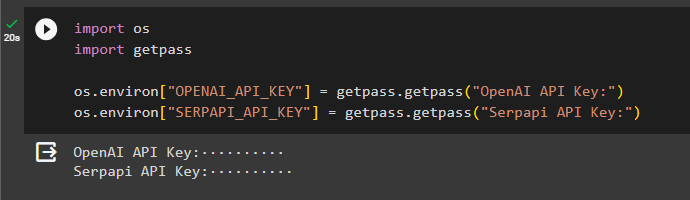

Step 2: Setup Environments

The second step is to set up the environment using the API keys from OpenAI and SerpAPI:

import os
import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
os.environ["SERPAPI_API_KEY"] = getpass.getpass("Serpapi API Key:")

Execute the above code, then type the API keys one by one, and hit enter to complete the process:

Step 3: Building Language Model

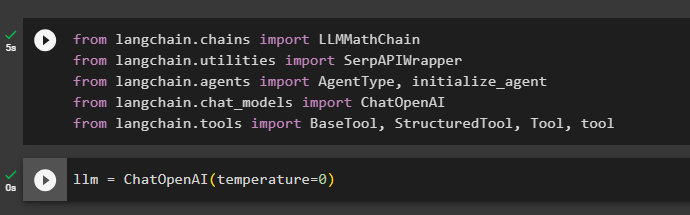

Before building the tools for the agent, configure the language model that will use the tools to perform tasks. To build the language model, import the required libraries from the LangChain dependencies:

from langchain.chains import LLMMathChain
from langchain.utilities import SerpAPIWrapper
from langchain.agents import AgentType, initialize_agent
from langchain.chat_models import ChatOpenAI
from langchain.tools import BaseTool, StructuredTool, Tool, tool

Now, build the language model by defining the llm variable and initializing it with the ChatOpenAI() constructor:

llm = ChatOpenAI(temperature=0)

Method 1: Configure New Tools

Users can build tools from scratch to meet their requirements. There are multiple ways to do that, which are listed below:

- Using Tool Dataclass

- Using Subclasses

- Using the Tool Decorator

Using Tool Dataclass

To build the customized tool, use the Tool dataclass with arguments like func, name, and description:

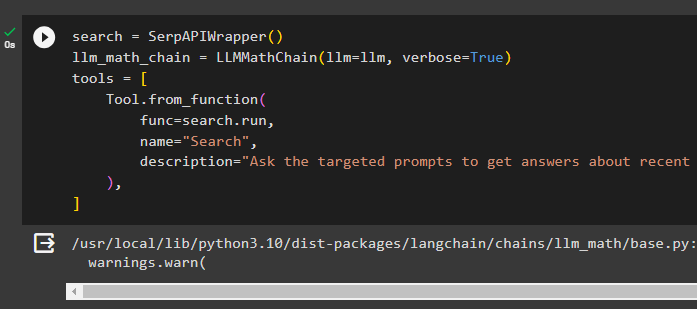

search = SerpAPIWrapper()
llm_math_chain = LLMMathChain(llm=llm, verbose=True)

tools = [
    Tool.from_function(
        func=search.run,
        name="Search",
        description="Ask the targeted prompts to get answers about recent affairs"
    ),
]

Build another tool for the agent, as the agent has multiple tasks to perform during the process. It is difficult for the agent to perform all the steps using one tool or a limited set of tools, so it should have access to all the tools required:

from pydantic import BaseModel, Field

class CalculatorInput(BaseModel):
    question: str = Field()

tools.append(
    Tool.from_function(
        func=llm_math_chain.run,
        name="Calculator",
        description="helpful for answering questions about mathematical problems",
        args_schema=CalculatorInput
    )
)

The above code builds a tool named “Calculator” whose description explains that it can solve mathematical problems. The args_schema describes the tool's input and gives the agent more clarity about when to use it for a mathematical input:

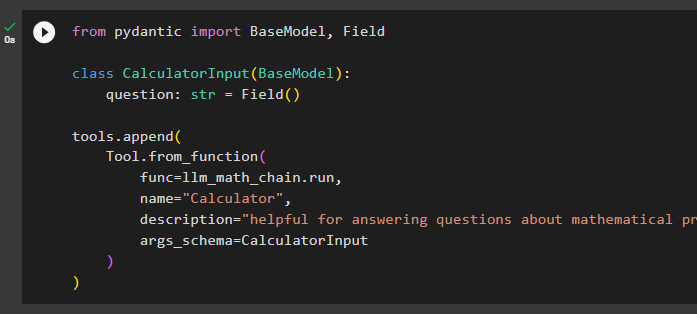

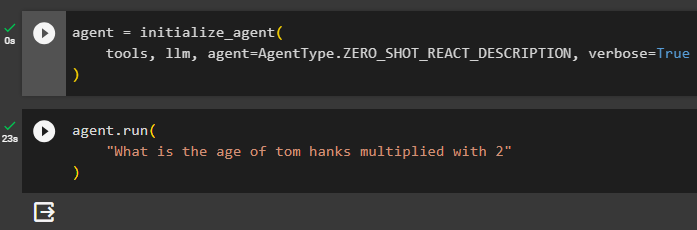

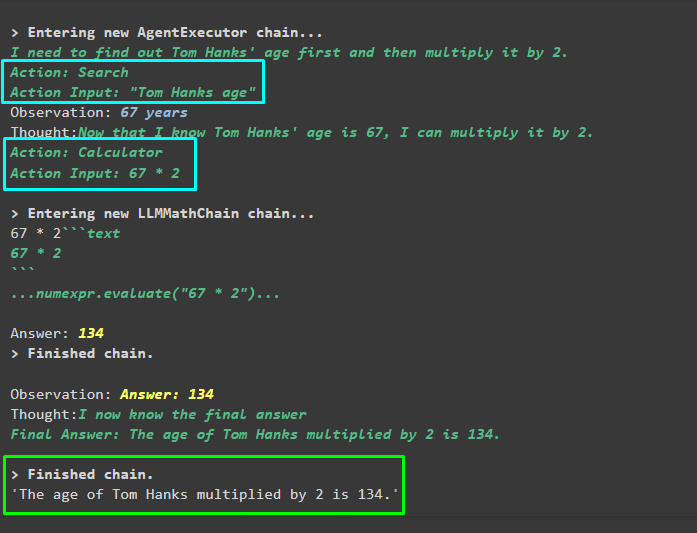

Now, initialize the agent using the customized tools, language model, type of the agent, and the verbose argument:

agent = initialize_agent(
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)

Execute the following code to run the agent with an input question that asks for the age of Tom Hanks multiplied by 2:

agent.run(
    "What is the age of tom hanks multiplied with 2"
)

Output

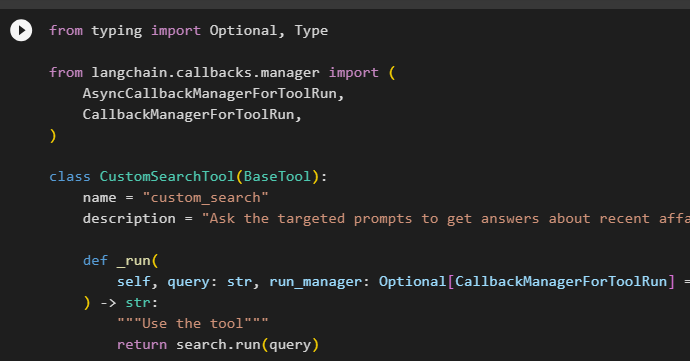

Using BaseTool Subclass

Another approach for building custom tools is subclassing BaseTool, which gives finer control over the complete process:

from typing import Optional, Type
from langchain.callbacks.manager import (
    AsyncCallbackManagerForToolRun,
    CallbackManagerForToolRun,
)

class CustomSearchTool(BaseTool):
    name = "custom_search"
    description = "Ask the targeted prompts to get answers about recent affairs"

    def _run(
        self, query: str, run_manager: Optional[CallbackManagerForToolRun] = None
    ) -> str:
        """Use the tool"""
        return search.run(query)

    async def _arun(
        self, query: str, run_manager: Optional[AsyncCallbackManagerForToolRun] = None
    ) -> str:
        """Use the tool asynchronously"""
        raise NotImplementedError("custom_search does not support async")

# Configure the calculator tool, which will be called for mathematical queries
class CustomCalculatorTool(BaseTool):
    name = "Calculator"
    description = "helpful for answering questions about mathematical problems"
    args_schema: Type[BaseModel] = CalculatorInput

    def _run(
        self, query: str, run_manager: Optional[CallbackManagerForToolRun] = None
    ) -> str:
        """Use the tool"""
        return llm_math_chain.run(query)

    async def _arun(
        self, query: str, run_manager: Optional[AsyncCallbackManagerForToolRun] = None
    ) -> str:
        """Use the tool asynchronously"""
        raise NotImplementedError("Calculator does not support async")

The above code defines the CustomSearchTool and CustomCalculatorTool classes as subclasses of BaseTool. Each class sets the tool's name and description and implements the _run() and _arun() methods. The _run() method contains the synchronous logic and accepts an optional run_manager for callbacks. The _arun() method is the asynchronous counterpart; here it raises NotImplementedError because these tools do not support asynchronous calls:

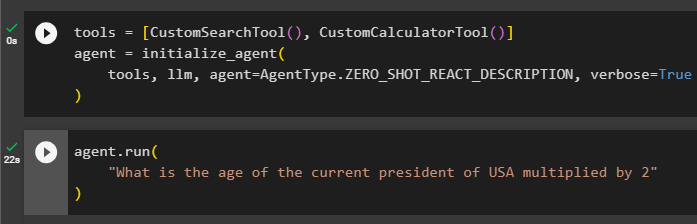
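The split between a public run() method and a private _run() implementation can be sketched without LangChain. The example below is a simplified assumption about the pattern, not the framework's real code: SketchBaseTool and UppercaseTool are hypothetical names, and the real BaseTool also handles callbacks, input parsing, and error handling.

```python
import asyncio
from abc import ABC, abstractmethod


class SketchBaseTool(ABC):
    """Hypothetical, simplified base class; LangChain's BaseTool does much more."""

    name: str = ""
    description: str = ""

    @abstractmethod
    def _run(self, query: str) -> str:
        """Synchronous implementation supplied by the subclass."""

    async def _arun(self, query: str) -> str:
        """Asynchronous implementation; unsupported unless overridden."""
        raise NotImplementedError(f"{self.name} does not support async")

    def run(self, query: str) -> str:
        # Public entry point: a real framework would also fire callbacks here.
        return self._run(query)

    async def arun(self, query: str) -> str:
        # Public asynchronous entry point, delegating to the subclass hook.
        return await self._arun(query)


class UppercaseTool(SketchBaseTool):
    name = "uppercase"
    description = "Converts the input text to upper case."

    def _run(self, query: str) -> str:
        return query.upper()


tool_instance = UppercaseTool()
print(tool_instance.run("hello"))  # HELLO

try:
    asyncio.run(tool_instance.arun("hello"))
except NotImplementedError as exc:
    print(exc)  # uppercase does not support async
```

Keeping the public methods in the base class means every subclass gets the same entry points and only has to supply the tool-specific logic.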

Use the classes configured above to build the tools by calling CustomSearchTool() and CustomCalculatorTool(). After that, initialize the agent using the tools, llm, agent type, and verbose arguments to see the complete reasoning of the agent in the output:

tools = [CustomSearchTool(), CustomCalculatorTool()]

agent = initialize_agent(
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)

Test the agent by calling the run() method with the input string to get the information from the language model:

agent.run(
    "What is the age of current president of USA multiplied by 2"
)

Output

The following screenshot displays the output extracted using the tools configured with the BaseTool subclass:

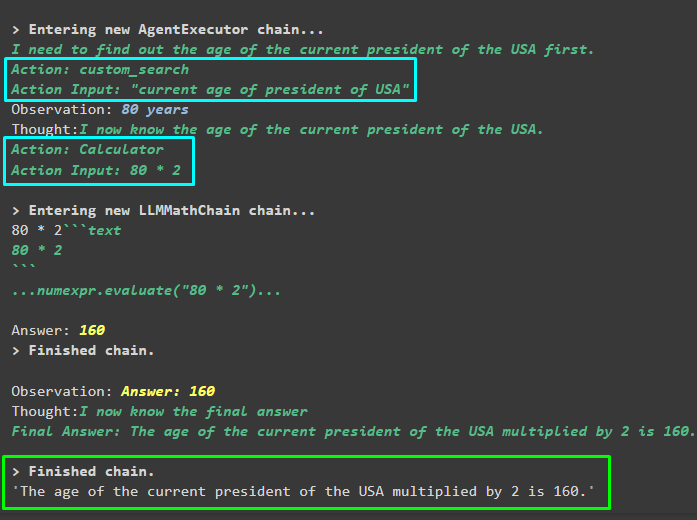

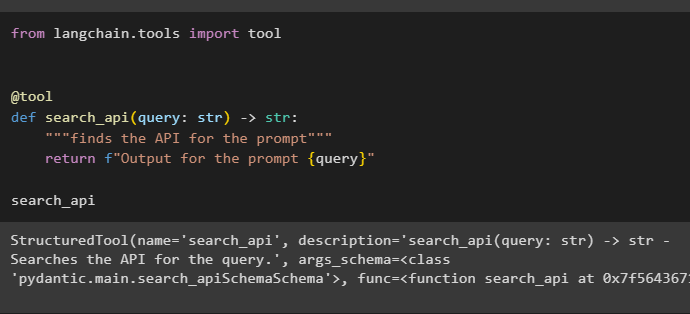

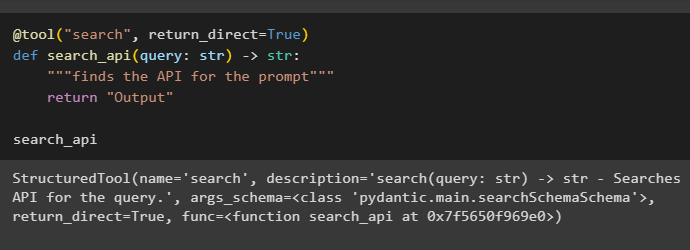

Using the Tool Decorator

The third approach for building new custom tools is using the @tool decorator which is provided by the LangChain framework:

from langchain.tools import tool

# Configure the tool with the name of the function
@tool
def search_api(query: str) -> str:
    """finds the API for the prompt"""
    return f"Output for the prompt {query}"

search_api

The decorator uses the function name as the name of the tool, which can be overridden by passing a string as the first argument. The description of the tool is taken from the docstring of the decorated function.

The user can build the customized tool using the @tool decorator with the arguments like the name of the tool and return_direct:

# Configure the tool with the name and return_direct arguments
@tool("search", return_direct=True)
def search_api(query: str) -> str:
    """finds the API for the prompt"""
    return "Output"

search_api

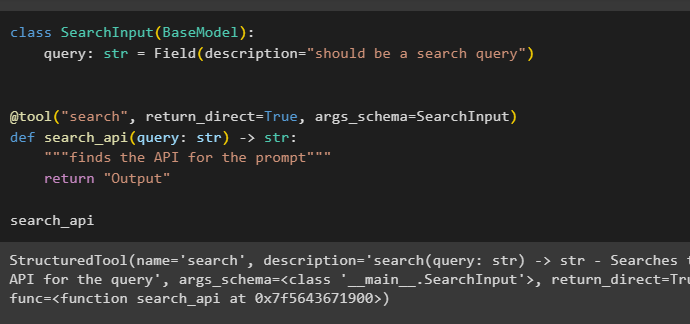

The user can also define a BaseModel subclass, here the SearchInput class, to describe the tool's arguments and pass it to the decorator using args_schema:

class SearchInput(BaseModel):
    query: str = Field(description="should be a search query")

# Configure the tool with multiple arguments and a BaseModel schema
@tool("search", return_direct=True, args_schema=SearchInput)
def search_api(query: str) -> str:
    """finds the API for the prompt"""
    return "Output"

search_api
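The decorator behavior described in this section (function name as tool name, docstring as description, optional name override, and return_direct) can be sketched in plain Python. This is only an illustration of the idea under stated assumptions: sketch_tool and SketchTool are hypothetical names, and the code is not LangChain's implementation of @tool.

```python
from typing import Callable, Union


class SketchTool:
    """Hypothetical container for a decorated function (not LangChain's class)."""

    def __init__(self, name: str, description: str, func: Callable, return_direct: bool):
        self.name = name
        self.description = description
        self.func = func
        self.return_direct = return_direct

    def run(self, tool_input: str) -> str:
        return self.func(tool_input)


def sketch_tool(arg: Union[str, Callable], return_direct: bool = False):
    """Usable both as @sketch_tool and as @sketch_tool("name", return_direct=True)."""

    def build(func: Callable, name: str) -> SketchTool:
        # The docstring becomes the description, so this sketch requires one.
        if not func.__doc__:
            raise ValueError("the decorated function needs a docstring")
        return SketchTool(name, func.__doc__.strip(), func, return_direct)

    if callable(arg):
        # Bare form: the function name becomes the tool name.
        return build(arg, arg.__name__)

    # Called form: the string overrides the function name.
    return lambda func: build(func, arg)


@sketch_tool
def search_api(query: str) -> str:
    """finds the API for the prompt"""
    return f"Output for the prompt {query}"


@sketch_tool("search", return_direct=True)
def search_api_named(query: str) -> str:
    """finds the API for the prompt"""
    return "Output"


print(search_api.name, "|", search_api.description)
print(search_api_named.name, "|", search_api_named.return_direct)
```

Because the description comes from the docstring, a clear docstring matters as much as the function body: it is the text the model reads when deciding whether to call the tool.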

Method 2: Custom Structured Tools

The second method is to build structured tools. The following approaches are used in this method:

- Using StructuredTool Dataclass

- Using Subclass BaseTool

- Using the Decorator

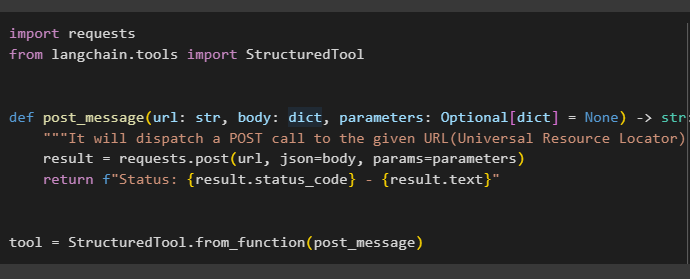

Using StructuredTool Dataclass

Some tools require more structured arguments so that the agent can choose and call them correctly. To build these tools, use StructuredTool from the LangChain dependencies, which creates tools with clearly defined arguments:

import requests
from typing import Optional
from langchain.tools import StructuredTool

def post_message(url: str, body: dict, parameters: Optional[dict] = None) -> str:
    """It will dispatch a POST call to the given URL (Uniform Resource Locator) with the provided content and arguments"""
    result = requests.post(url, json=body, params=parameters)
    return f"Status: {result.status_code} - {result.text}"

tool = StructuredTool.from_function(post_message)

The above code builds a structured tool from the post_message() function, which takes multiple arguments. The function's signature and docstring give the agent a clearer description of the tool's inputs and purpose:

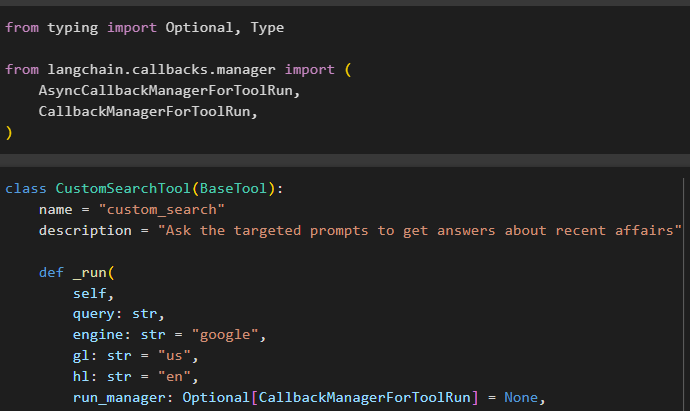
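To see how a function's signature alone can describe a tool's arguments, the sketch below uses Python's standard inspect module to list each parameter, its type hint, and whether it is required. The infer_arguments helper is a hypothetical name, and the post_message function here is a placeholder that sends nothing; how StructuredTool builds its schema internally is not shown, only the general idea.

```python
import inspect
from typing import Optional, get_type_hints


def infer_arguments(func) -> dict:
    """Map each parameter name to its type hint and whether it is required."""
    hints = get_type_hints(func)
    arguments = {}
    for name, parameter in inspect.signature(func).parameters.items():
        arguments[name] = {
            "type": hints.get(name, str),
            # A parameter without a default value must be supplied by the caller.
            "required": parameter.default is inspect.Parameter.empty,
        }
    return arguments


def post_message(url: str, body: dict, parameters: Optional[dict] = None) -> str:
    """Placeholder with the same signature as the function above; sends nothing."""
    return f"would POST to {url}"


for name, info in infer_arguments(post_message).items():
    print(name, info)
```

Here url and body are reported as required while parameters is optional, which is the kind of detail an agent needs in order to call a multi-argument tool correctly.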

Using Subclass BaseTool

Another approach for creating structured tools is subclassing BaseTool after importing the CallbackManagerForToolRun and AsyncCallbackManagerForToolRun classes from LangChain:

from typing import Optional, Type
from langchain.callbacks.manager import (
    AsyncCallbackManagerForToolRun,
    CallbackManagerForToolRun,
)

Once the libraries are imported, define the CustomSearchTool class as a subclass of BaseTool, first without and then with an argument schema:

class CustomSearchTool(BaseTool):
    name = "custom_search"
    description = "Ask the targeted prompts to get answers about recent affairs"

    def _run(
        self,
        query: str,
        engine: str = "google",
        gl: str = "us",
        hl: str = "en",
        run_manager: Optional[CallbackManagerForToolRun] = None,
    ) -> str:
        """Use the tool"""
        search_wrapper = SerpAPIWrapper(params={"engine": engine, "gl": gl, "hl": hl})
        return search_wrapper.run(query)

    async def _arun(
        self,
        query: str,
        engine: str = "google",
        gl: str = "us",
        hl: str = "en",
        run_manager: Optional[AsyncCallbackManagerForToolRun] = None,
    ) -> str:
        """Use the tool asynchronously"""
        raise NotImplementedError("custom_search does not support async")

class SearchSchema(BaseModel):
    query: str = Field(description="search query can be stated here")
    engine: str = Field(description="search engine can be stated here")
    gl: str = Field(description="country code can be stated here")
    hl: str = Field(description="language code can be stated here")

# Define the CustomSearchTool class with the schema as its args_schema
class CustomSearchTool(BaseTool):
    name = "custom_search"
    description = "Ask the targeted prompts to get answers about recent affairs"
    args_schema: Type[SearchSchema] = SearchSchema

    # Define the arguments for the tool so the agent can recognize when each is required
    def _run(
        self,
        query: str,
        engine: str = "google",
        gl: str = "us",
        hl: str = "en",
        run_manager: Optional[CallbackManagerForToolRun] = None,
    ) -> str:
        """Use the tool"""
        search_wrapper = SerpAPIWrapper(params={"engine": engine, "gl": gl, "hl": hl})
        return search_wrapper.run(query)

    # Asynchronous counterpart with the same arguments; not supported by this tool
    async def _arun(
        self,
        query: str,
        engine: str = "google",
        gl: str = "us",
        hl: str = "en",
        run_manager: Optional[AsyncCallbackManagerForToolRun] = None,
    ) -> str:
        """Use the tool asynchronously"""
        raise NotImplementedError("custom_search does not support async")

The _run() and _arun() methods define the arguments of the tool in detail: the search query, the search engine to use, the country code (gl), and the language code (hl). Both methods also accept an optional run_manager, the callback manager for the tool run. The code also defines the SearchSchema class to describe all the additional parameters used in building the tool:

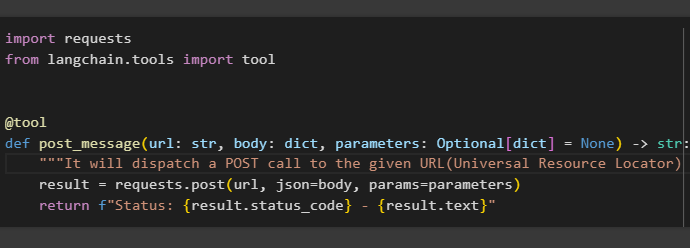

Using the Decorator

The @tool decorator can also be used to build the structured tools by defining the name of the function with its arguments and description of the tool:

import requests
from typing import Optional
from langchain.tools import tool

@tool
def post_message(url: str, body: dict, parameters: Optional[dict] = None) -> str:
    """It will dispatch a POST call to the given URL (Uniform Resource Locator) with the provided content and arguments"""
    result = requests.post(url, json=body, params=parameters)
    return f"Status: {result.status_code} - {result.text}"

That’s all about building new custom tools in LangChain, both simple tools with different arguments and structured tools that carry more information. The next methods explain how to use the existing tools and adjust them to the problem at hand.

Method 3: Modify Existing Tools

The user can modify an existing tool by changing its properties or by combining it with other tools. Sometimes a task requires multiple tools, or only part of an additional tool. In that case, the user can integrate them and perform the task accordingly.

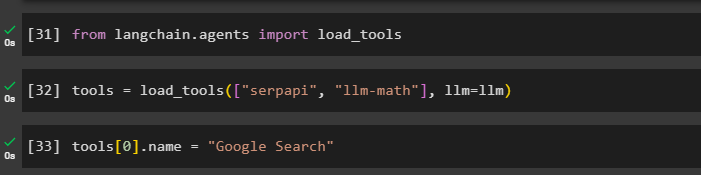

Loading the Existing Tools

Import the load_tools function from the LangChain framework to get started with the process:

from langchain.agents import load_tools

Load the required tools into the tools variable, passing the language model that the agent will use:

tools = load_tools(["serpapi", "llm-math"], llm=llm)

Rename the first tool to modify the existing one so that the agent sees it as a Google search tool:

tools[0].name = "Google Search"

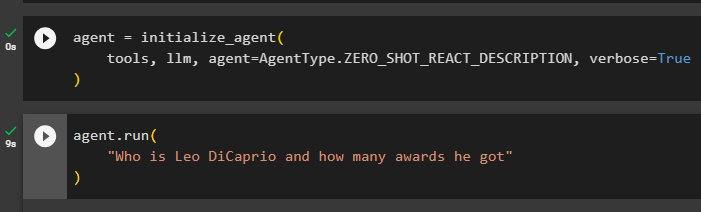

Building and Testing the Agent

After integrating and modifying the tools, simply initialize the agent using the tools, llm, agent, and verbose parameters:

agent = initialize_agent(
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)

Run the agent with the input string to get the information from the Google Search:

agent.run(
    "Who is Leo DiCaprio and how many awards he got"
)

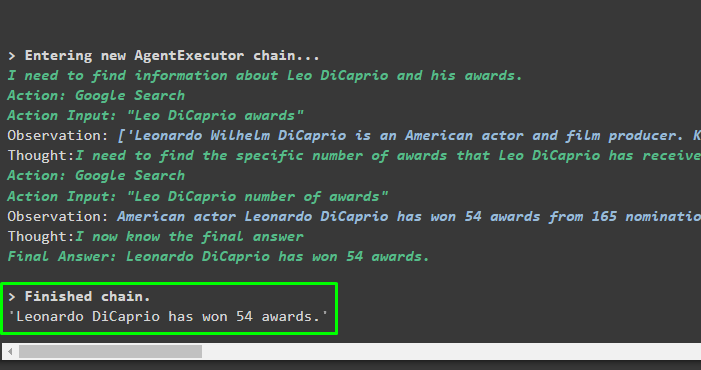

Output

The agent has used the Google Search to extract the information about the query provided by the user:

Method 4: Defining the Priorities Among Tools

Another way to customize the tools is to make one take priority over another through its description, as the following code shows:

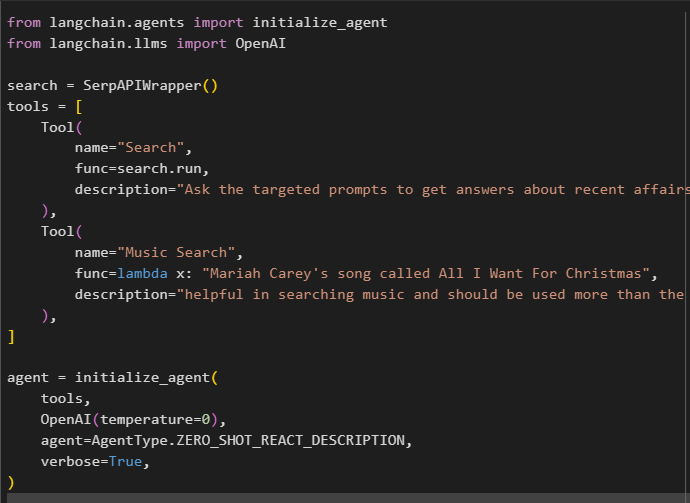

from langchain.agents import initialize_agent
from langchain.llms import OpenAI

search = SerpAPIWrapper()

tools = [
    Tool(
        name="Search",
        func=search.run,
        description="Ask the targeted prompts to get answers about recent affairs",
    ),
    Tool(
        name="Music Search",
        func=lambda x: "Mariah Carey's song called All I Want For Christmas",
        description="helpful in searching music and should be used more than the other tools for queries related to Music, like 'tell me the most viewed song in 2022' or 'about the singer of a song'",
    ),
]

agent = initialize_agent(
    tools,
    OpenAI(temperature=0),
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
)

The above code defines two tools, Search and Music Search, which both search for answers to a query but use different sources. The description of Music Search tells the agent to prefer it over the Search tool when the query is related to music. The agent is then initialized with all the components to make it ready for execution:

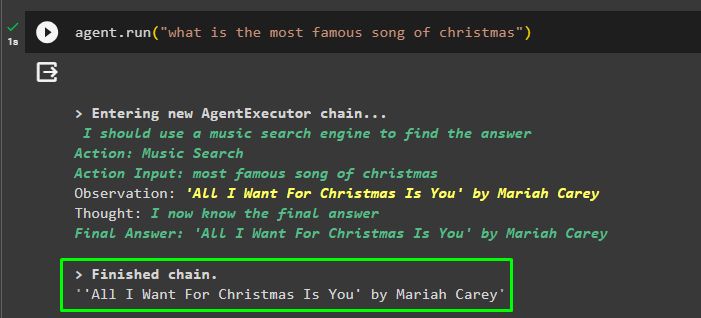

Call the run() function with the query in its argument to get the famous song for Christmas:

agent.run("what is the most famous song of Christmas")

The agent has used Music Search instead of the general Search tool to find the song asked for by the user:

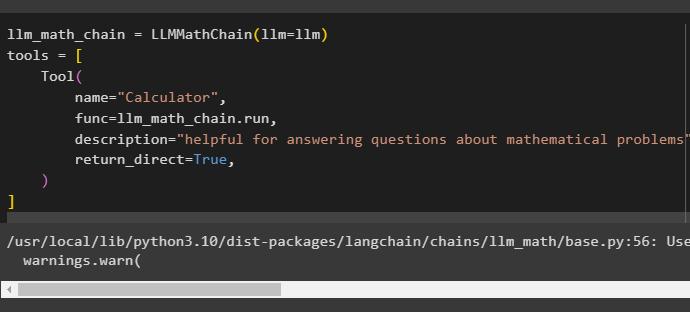
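The priority in this method is expressed purely in text: there is no numeric ranking, and the language model simply reads each tool's description. The sketch below assumes the agent places every tool's name and description into its prompt as "name: description" lines, as description-based agents do. ToolInfo and render_tool_list are hypothetical names used only for this illustration.

```python
from typing import List, NamedTuple


class ToolInfo(NamedTuple):
    """Hypothetical record holding just the fields the model gets to read."""

    name: str
    description: str


def render_tool_list(tools: List[ToolInfo]) -> str:
    """Join tools into 'name: description' lines for inclusion in a prompt."""
    return "\n".join(f"{tool.name}: {tool.description}" for tool in tools)


tools_info = [
    ToolInfo("Search", "Ask the targeted prompts to get answers about recent affairs"),
    ToolInfo(
        "Music Search",
        "helpful in searching music and should be used more than the other "
        "tools for queries related to Music",
    ),
]

print(render_tool_list(tools_info))
```

Since the model only sees this text, wording such as "should be used more than the other tools" is what nudges it toward Music Search for music-related questions. The model may still choose differently, so the priority is a strong hint rather than a guarantee.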

Method 5: Using Tools to Return Directly

Often, the user wants the tool's output returned as the final answer without any further processing by the agent. This can be done using the return_direct parameter, which the user can pass while defining the tool as shown in the following code:

llm_math_chain = LLMMathChain(llm=llm)

tools = [
    Tool(
        name="Calculator",
        func=llm_math_chain.run,
        description="helpful for answering questions about mathematical problems",
        return_direct=True,
    )
]

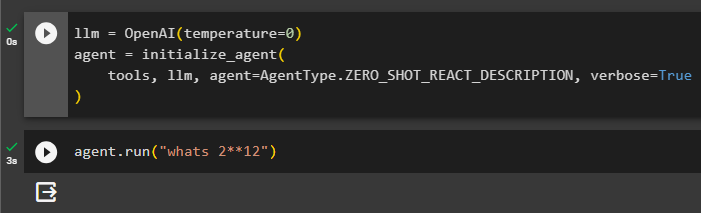

Build the language model and initialize the agent:

llm = OpenAI(temperature=0)

agent = initialize_agent(
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)

Run the agent to get a direct answer to the question, which asks for 2 to the power of 12:

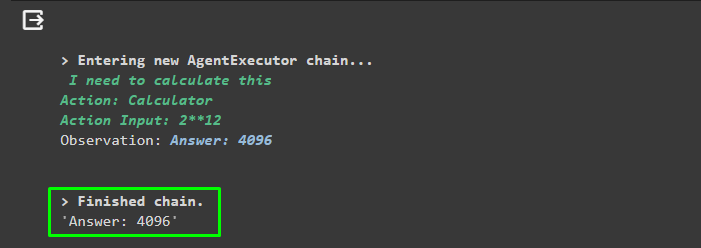

agent.run("whats 2**12")

Output

The following snippets show that the agent has returned the answer directly, without going through multiple steps and observations. The question was a mathematical one that could be answered by the Calculator tool alone, so the agent did not need much time. It provides the tool's answer directly so the user can move on to other questions quickly:

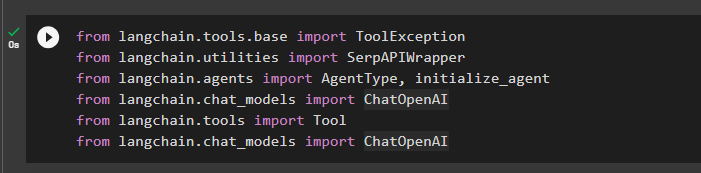

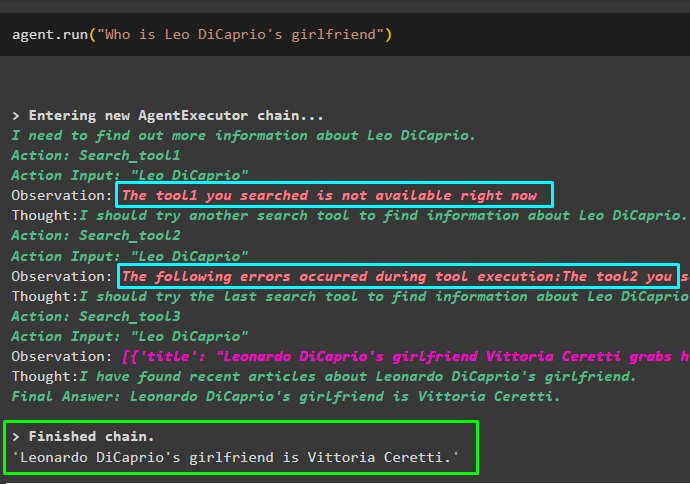
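The effect of return_direct on the agent loop can be sketched as follows. This is a simplified assumption about the control flow and not LangChain's executor: the plan list is a hard-coded stand-in for the decisions the language model would make, and DirectTool, run_steps, and power are hypothetical names.

```python
from typing import Callable, Dict, List, NamedTuple, Tuple


class DirectTool(NamedTuple):
    """Hypothetical tool record: a function plus the return_direct flag."""

    func: Callable[[str], str]
    return_direct: bool = False


def run_steps(tools: Dict[str, DirectTool], plan: List[Tuple[str, str]]) -> str:
    """Execute (tool name, input) steps; stop early on a return_direct tool."""
    observation = ""
    for tool_name, tool_input in plan:
        chosen = tools[tool_name]
        observation = chosen.func(tool_input)
        if chosen.return_direct:
            # Skip any remaining reasoning steps and hand the raw output back.
            return observation
    # Without return_direct, the agent would phrase its own final answer.
    return f"Final Answer: {observation}"


def power(expression: str) -> str:
    """Evaluate 'base**exponent' for non-negative integers only."""
    base, exponent = expression.split("**")
    return f"Answer: {int(base) ** int(exponent)}"


plan = [("Calculator", "2**12")]

print(run_steps({"Calculator": DirectTool(power, return_direct=True)}, plan))
print(run_steps({"Calculator": DirectTool(power)}, plan))
```

With return_direct=True the first call returns the tool's own text, "Answer: 4096", while the second call wraps it in a final answer, mimicking the extra step the agent would otherwise take.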

Bonus Tip: Handling Tool Errors

Custom tools can also be configured to manage the errors or exceptions that occur during agent execution. If a tool raises an error that is not handled, the agent stops working, reports the error, and discards the information gathered so far. No answer is delivered, and the time and compute spent on the run are wasted. To solve this, LangChain provides the ToolException class and the handle_tool_error argument:

from langchain.tools.base import ToolException

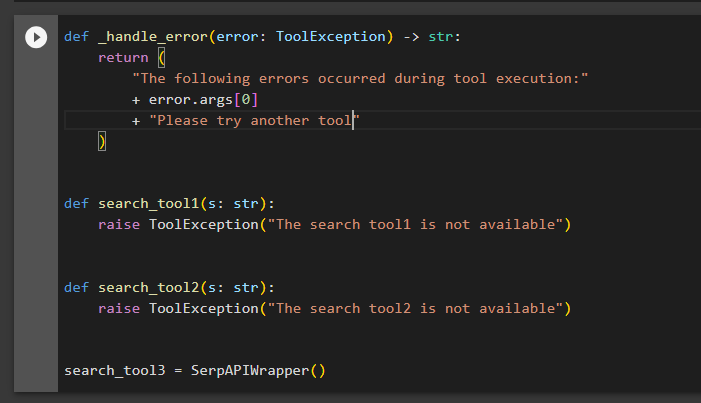

Define the _handle_error() function, which takes the exception as its argument and returns the error message as a string. After the error is returned, the agent does not stop. Instead, it can try another tool and carry on with the process until a working tool is found:

def _handle_error(error: ToolException) -> str:
    # Spaces are added around the message so the three parts do not run together
    return (
        "The following errors occurred during tool execution: "
        + error.args[0]
        + " Choose or opt other tools"
    )

def search_tool1(s: str):
    raise ToolException("The tool1 you searched is not available right now")

def search_tool2(s: str):
    raise ToolException("The tool2 you searched is not available right now")

search_tool3 = SerpAPIWrapper()

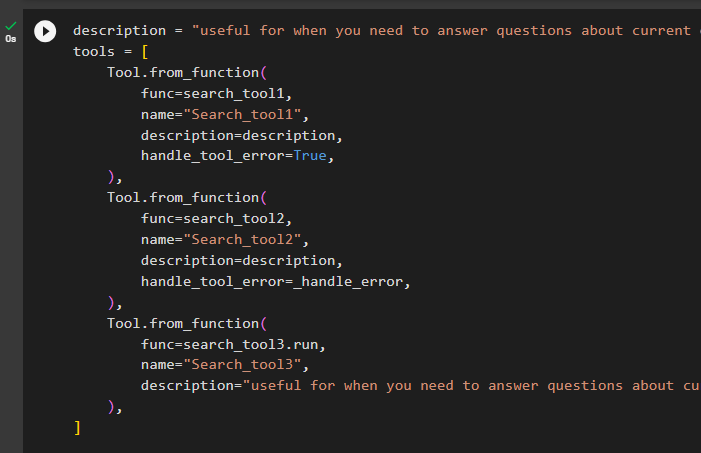

The following code builds three search tools. Only the third one can extract answers; the first two always raise a ToolException, which is handled through handle_tool_error. After that, initialize the agent with its required arguments:

description = "Ask the targeted prompts to get answers about recent affairs and it should be given priority"

tools = [
    Tool.from_function(
        func=search_tool1,
        name="Search_tool1",
        description=description,
        handle_tool_error=True,
    ),
    Tool.from_function(
        func=search_tool2,
        name="Search_tool2",
        description=description,
        handle_tool_error=_handle_error,
    ),
    Tool.from_function(
        func=search_tool3.run,
        name="Search_tool3",
        description="Ask the targeted prompts to get answers about recent affairs",
    ),
]

agent = initialize_agent(
    tools,
    ChatOpenAI(temperature=0),
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
)
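The handle_tool_error argument is given two different kinds of values above: True for the first tool and a function for the second. The sketch below illustrates one plausible way such a setting can turn an exception into an observation instead of a crash. It is a simplified assumption and not the library's code; SketchToolException, run_with_handler, unavailable, and custom_handler are hypothetical names.

```python
from typing import Callable, Union


class SketchToolException(Exception):
    """Hypothetical stand-in for LangChain's ToolException."""


def run_with_handler(
    func: Callable[[str], str],
    tool_input: str,
    handle_tool_error: Union[bool, str, Callable[[SketchToolException], str]] = False,
) -> str:
    """Run func and turn a SketchToolException into an observation if asked to."""
    try:
        return func(tool_input)
    except SketchToolException as error:
        if handle_tool_error is False:
            raise  # no handling requested: the error stops the run
        if handle_tool_error is True:
            # Default handling: use the exception message as the observation.
            return str(error.args[0]) if error.args else "Tool execution error"
        if isinstance(handle_tool_error, str):
            return handle_tool_error  # fixed replacement message
        return handle_tool_error(error)  # custom handler function


def unavailable(_: str) -> str:
    raise SketchToolException("The tool is not available right now")


def custom_handler(error: SketchToolException) -> str:
    return f"Error: {error.args[0]}. Choose another tool."


print(run_with_handler(unavailable, "query", handle_tool_error=True))
print(run_with_handler(unavailable, "query", handle_tool_error=custom_handler))
```

In both calls the failure comes back as ordinary text, which is what allows an agent to read the message and pick a different tool rather than aborting.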

Run the agent with the question:

agent.run("Who is Leo DiCaprio's girlfriend")

The agent has tried the first two tools, which could not search the internet, so it moved on to the one that could. The third tool has extracted the information from the internet and shows it on the screen, as displayed in the screenshot below:

That’s all about the process of building the new tools from scratch or changing the existing tools in LangChain.

Conclusion

To create custom tools in LangChain, the user can either build new tools or adapt the existing ones to their tasks. New tools can be built from scratch using the Tool dataclass, BaseTool subclass, and @tool decorator approaches. Existing tools can be adapted to the problem by modifying them, prioritizing them, and returning their answers directly. The user can also customize the tools to handle errors or exceptions so the agent can keep going with the process.