The LangChain framework contains the dependencies for building agents that help Large Language Models (LLMs) answer different kinds of queries. LLMs are very powerful, but they struggle with things that an ordinary computer program handles easily, such as simple reasoning and logic. Agents help the model through these specific tasks by giving it tools to work with.

Agents are computer programs that handle the logic and reasoning for the language model by calling different tools. The user can configure multiple tools for an agent to solve particular problems such as searching, calculating, and many others. An agent can't always handle a query correctly on its own: it may not have the required tool, or it may not know how to use a particular tool.

Quick Outline

This guide explains the following sections:

How to Add Human Validation to Any Tool in LangChain

- Step 1: Install Modules

- Step 2: Setup OpenAI Environment

- Step 3: Building the Tool

- Step 4: Adding Human Validation

- Step 5: Configuring Human Validation

- Step 6: Building the Language Model

- Step 7: Testing the Agent

How to Add Human Validation to Any Tool in LangChain?

Getting outside intervention when choosing tools for different jobs can make the language model more reliable. If the agent is unsure whether to use a tool for a particular job, it can simply ask a human to validate the choice. This guide walks through the process with a Python script in a Google Colaboratory notebook, and the complete script is given here:

To learn how to add human validation to any tool in LangChain, go through the listed steps:

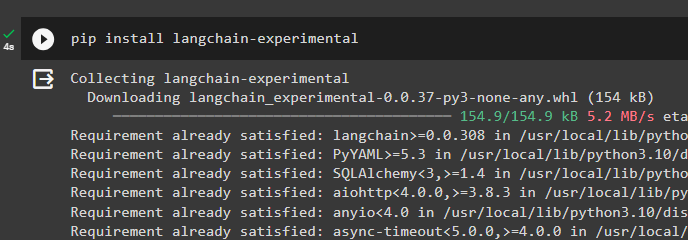

Step 1: Install Modules

First, install the LangChain dependencies through the langchain-experimental package, which is used to build tools for agents and add human validation to them:

pip install langchain-experimental

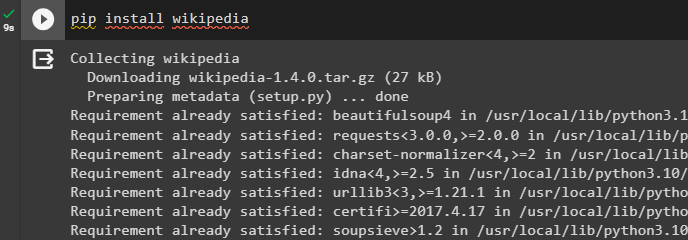

Install the wikipedia package with pip; it is needed by the Wikipedia tool used later in the validation process:

pip install wikipedia

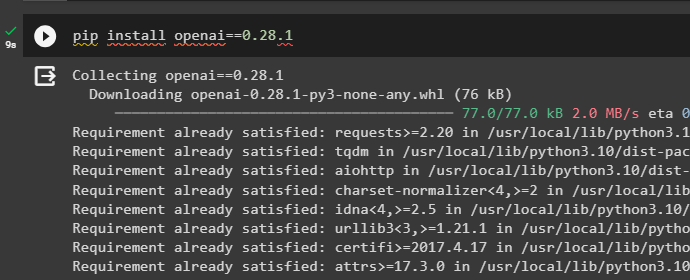

Another required module is OpenAI, which is used to build the language or chat model:

pip install openai==0.28.1

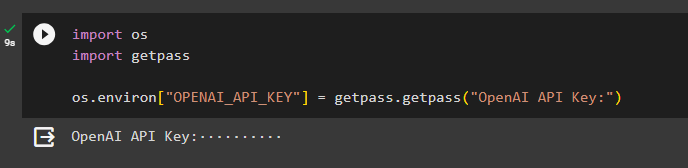

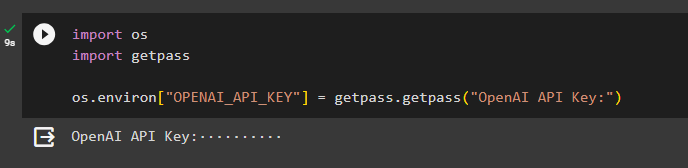
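Since this step pins openai to a specific version, it can be useful to confirm what actually got installed in the notebook. The following is a minimal sketch using only the Python standard library; the helper name installed_version is made up for this illustration, and the package names are the ones from the pip commands above.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Package names taken from the pip commands in this step.
for name in ("langchain-experimental", "wikipedia", "openai"):
    print(f"{name}: {installed_version(name) or 'not installed'}")
```

If openai reports something other than 0.28.1, rerunning the pinned pip command and restarting the notebook runtime is usually the simplest fix.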

Step 2: Setup OpenAI Environment

After installing the modules, set up the environment for building and using the agent's tools. The environment is set up by providing the API key from the OpenAI account, which the model reads from the OPENAI_API_KEY environment variable:

import os
import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
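When a notebook cell is rerun, prompting for the key every time gets tedious. One possible variation, sketched below, asks for the key only when the environment variable is not already set. The function name ensure_api_key and its prompt parameter are assumptions made for this example, not part of LangChain or OpenAI.

```python
import getpass
import os


def ensure_api_key(var_name: str = "OPENAI_API_KEY", prompt=getpass.getpass) -> str:
    """Return the key from the environment, prompting only when it is missing."""
    key = os.environ.get(var_name)
    if not key:
        # getpass hides the typed value so the key does not show up in the output
        key = prompt(f"{var_name}: ")
        os.environ[var_name] = key
    return key
```

Calling ensure_api_key() in a cell behaves like the code above on the first run and returns silently afterwards.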

Step 3: Building the Tool

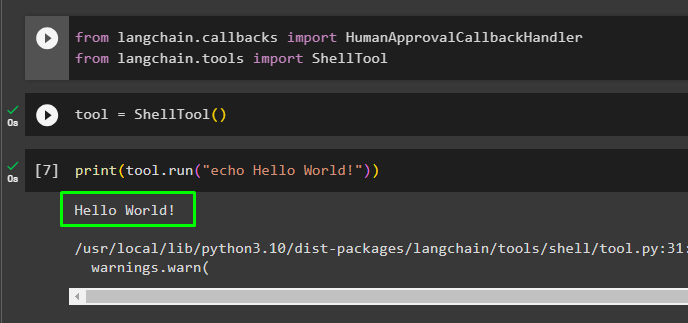

Once the setup is complete, import ShellTool and HumanApprovalCallbackHandler from the installed modules. ShellTool is the tool used in this guide, and the validation step will be added to it. HumanApprovalCallbackHandler is the callback handler that makes a tool ask a human for approval before it runs for a specific job:

from langchain.callbacks import HumanApprovalCallbackHandler
from langchain.tools import ShellTool

Call ShellTool() to initialize the tool variable, which can later be passed to the agent as an argument:

tool = ShellTool()

Print the Hello World! string by running the tool with the run() method:

print(tool.run("echo Hello World!"))

Running the above code displays the Hello World! string along with a warning that the ShellTool has no safeguards by default, so use it carefully and at your own risk. This means we can't leave it to the agent to decide when to use the tool. Instead, a human should approve each use of the tool, allowing it only when the command is safe or there is no other option:
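To see why the warning matters, it helps to picture what a shell tool boils down to: a string handed to the operating system's shell. The sketch below is a plain standard-library stand-in, not LangChain's actual implementation, and run_shell is a hypothetical helper name.

```python
import subprocess


def run_shell(command: str) -> str:
    """Run a command through the system shell and return stdout plus stderr."""
    completed = subprocess.run(
        command, shell=True, capture_output=True, text=True
    )
    return completed.stdout + completed.stderr


# Whatever string is passed in gets executed, which is exactly the risk.
print(run_shell("echo Hello World!"))
```

Nothing in this function distinguishes a harmless echo from a destructive command, which is why an approval step in front of it is useful.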

Step 4: Adding Human Validation

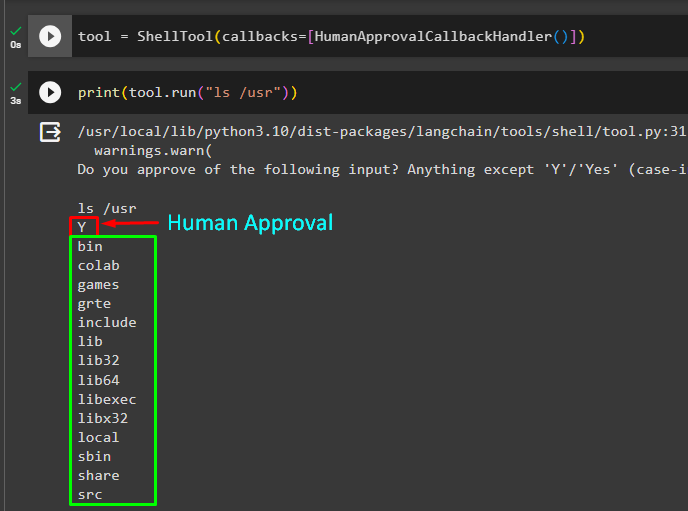

Because the tool is not safe to use unattended, someone has to make the decision to run the ShellTool. For this and similar reasons, the developer can pass a HumanApprovalCallbackHandler() instance in the callbacks argument of ShellTool():

tool = ShellTool(callbacks=[HumanApprovalCallbackHandler()])

List the contents of a directory by running the tool with the run() method:

print(tool.run("ls /usr"))

Running the above code asks the user to approve the use of the tool by typing “Y/Yes”; any other input is treated as a no. Answering yes allows the tool to run and list the contents of the directory, as displayed in the screenshot below:

Run the tool again to get the contents of the /private directory using this code:

print(tool.run("ls /private"))

This time the tool asks for approval and the answer is no, so the command is not executed and the call ends with an error (a HumanRejectedException) reporting that the input to the tool was rejected:

Typing yes instead grants permission, and the tool lists the directory contents on the screen:
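The approve-or-reject behaviour described above can be pictured as a small gate in front of the tool. The following is a simplified sketch of that pattern in plain Python; RejectedByHuman, run_with_approval, and fake_tool are hypothetical names for this illustration and are not the LangChain classes. The reviewer's answers are scripted so the example runs unattended.

```python
class RejectedByHuman(Exception):
    """Raised by this sketch when the reviewer declines a tool input."""


def run_with_approval(tool, tool_input: str, ask=input) -> str:
    """Call tool(tool_input) only if the reviewer answers 'y' or 'yes'."""
    answer = ask(f"Approve this input? {tool_input!r} [y/N] ")
    if answer.strip().lower() not in ("y", "yes"):
        raise RejectedByHuman(f"Input {tool_input!r} was rejected.")
    return tool(tool_input)


# A harmless stand-in tool, so nothing is actually executed.
def fake_tool(text: str) -> str:
    return f"ran: {text}"


# Approved: the tool runs and its result is returned.
print(run_with_approval(fake_tool, "ls /usr", ask=lambda _: "yes"))

# Rejected: the tool never runs and the caller can handle the exception.
try:
    run_with_approval(fake_tool, "ls /private", ask=lambda _: "n")
except RejectedByHuman as err:
    print(f"Blocked: {err}")
```

Wrapping the call in try/except, as in the last lines, is one way to keep a notebook cell from stopping with a traceback when the reviewer says no.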

Step 5: Configuring Human Validation

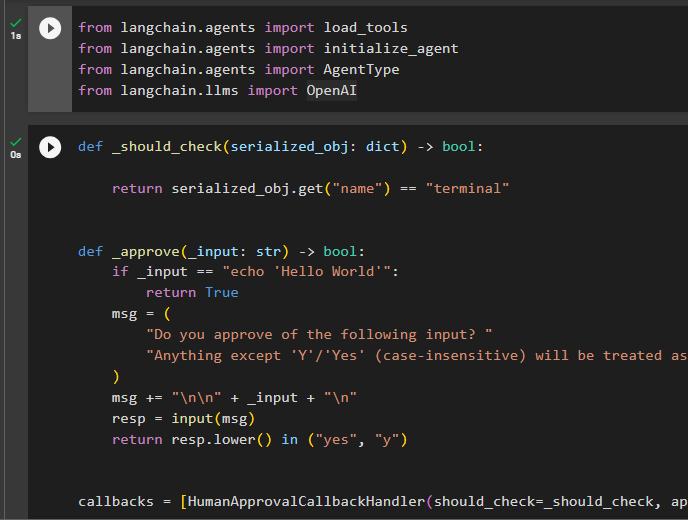

In many cases the agent has multiple tools, and the user can't realistically validate every single tool call. LangChain lets the user restrict the validation process to specific tools. Configuring this requires the following imports:

from langchain.agents import load_tools
from langchain.agents import initialize_agent
from langchain.agents import AgentType
from langchain.llms import OpenAI

The following code configures when human approval is required before a tool is used:

def _should_check(serialized_obj: dict) -> bool:
    # Only require human approval for the tool named "terminal"
    return serialized_obj.get("name") == "terminal"

def _approve(_input: str) -> bool:
    # Approve this one harmless command automatically, without prompting
    if _input == "echo 'Hello World'":
        return True
    msg = (
        "Do you approve of the following input? "
        "Anything except 'Y'/'Yes' (case-insensitive) will be treated as a no."
    )
    msg += "\n\n" + _input + "\n"
    resp = input(msg)
    return resp.lower() in ("yes", "y")

callbacks = [HumanApprovalCallbackHandler(should_check=_should_check, approve=_approve)]

The above code defines two functions, _should_check() and _approve(), that control when the user is consulted. The _should_check() function returns True only for the tool named terminal, so the other tools run without validation. The _approve() function automatically approves the exact input echo 'Hello World', since printing a string on the screen does not require any reasoning or searching from different sources. For any other input to the terminal tool, it asks the user to confirm by typing “Y/Yes”, and any other response is considered a no:

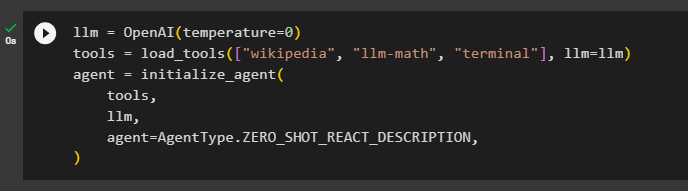

Step 6: Building the Language Model

Once the validation process is configured, build the language model using OpenAI() and load tools such as wikipedia, llm-math, and terminal. After that, create the agent by passing the tools and the model as arguments to initialize_agent():

llm = OpenAI(temperature=0)
tools = load_tools(["wikipedia", "llm-math", "terminal"], llm=llm)
agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
)

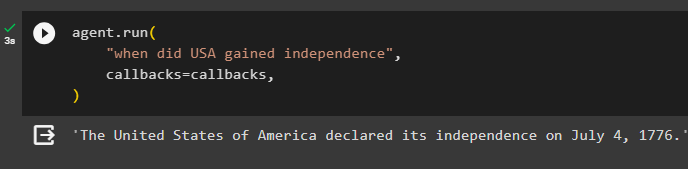

Step 7: Testing the Agent

Now, test the agent by calling the run() method with a simple question and passing the configured handler through the callbacks argument:

agent.run(
    "when did USA gained independence",
    callbacks=callbacks,
)

The agent does not ask the user for validation here, because it answers the question with a search tool, which _should_check() does not flag for approval:

Run the agent again to print a string on the screen:

agent.run("print 'Hello World' in the terminal", callbacks=callbacks)

The agent again returns the result on the screen without asking the user, because _approve() approves the echo 'Hello World' command automatically:

Lastly, run the agent to get the list of contents for a specific directory:

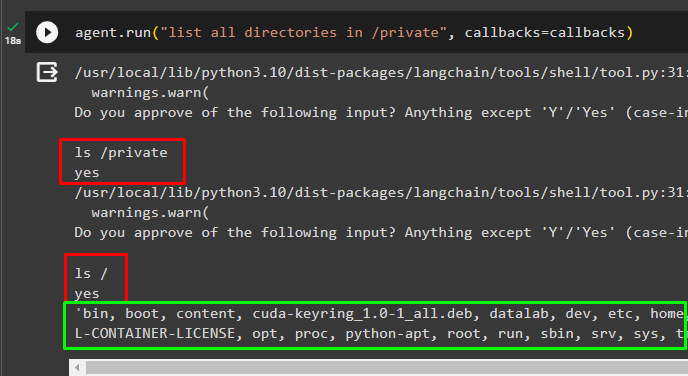

agent.run("list all directories in /private", callbacks=callbacks)

This time the agent asks the user for input, and the user can say yes to continue with the process or no to terminate the agent:
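The three runs differ only in which tool the agent picks and what input it sends. The sketch below condenses the Step 5 configuration into a single function that labels each case. The function name decide and the example tool calls are hypothetical, since the exact tool names and inputs an agent produces can vary from run to run.

```python
def decide(tool_name: str, tool_input: str) -> str:
    """Classify a tool call the way the Step 5 configuration treats it."""
    if tool_name != "terminal":
        return "runs without approval"  # _should_check returns False
    if tool_input == "echo 'Hello World'":
        return "auto-approved"  # _approve returns True immediately
    return "asks the human"  # _approve falls through to input()


# Hypothetical tool calls an agent might make for the three prompts above.
calls = [
    ("wikipedia", "United States independence"),
    ("terminal", "echo 'Hello World'"),
    ("terminal", "ls /private"),
]
for name, text in calls:
    print(f"{name:10} {text!r:32} -> {decide(name, text)}")
```

Note that the automatic approval depends on an exact string match. If the agent phrases the command differently, for example with double quotes, the call falls into the "asks the human" case instead.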

That’s all about the process of adding human validation to any tool in the LangChain framework.

Conclusion

To add human validation to any tool in LangChain, install the required modules and import the libraries they provide. After that, set up the environment for the language model and build the tools to solve different problems. Then add human validation, so the user has a say each time a tool is about to be used and security risks and similar problems are avoided.

The user can also configure the validation process to specify exactly when human validation is required. Asking a human to validate every tool call slows the whole process down, and agents typically use several tools to complete a task, which is why configuring the validation process matters. This guide has elaborated on the process of adding human validation to any tool in LangChain.